OpenACC Tutorial - Profiling: Difference between revisions

Revision as of 14:45, 7 June 2016

- Understand what a profiler is.

- Understand how to use the PGPROF profiler.

- Understand how the code is performing.

- Understand where to focus your time, and rewrite the most time-consuming routines.

- Learn how to ...

What is profiling?

Gathering a Profile

Why would one need to gather a profile of a code? Because it is the only way to understand:

- Where time is being spent (Hotspots)

- How the code is performing

- Where to focus your time
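To make the idea of a hotspot concrete, here is a minimal sketch of what a profiler automates: timing each routine and accumulating the totals. The names `Totals`, `time_call` and `hotspot` are hypothetical helpers introduced for illustration; they are not part of PGPROF, NVPROF, or the tutorial code.

```cpp
#include <cassert>
#include <chrono>
#include <map>
#include <string>

// Accumulated wall-clock seconds per label (hypothetical helper type).
using Totals = std::map<std::string, double>;

// Run `work` once and add its elapsed wall-clock time to totals[label].
template <typename F>
void time_call(Totals& totals, const std::string& label, F&& work) {
    const auto start = std::chrono::steady_clock::now();
    work();
    const auto stop = std::chrono::steady_clock::now();
    totals[label] += std::chrono::duration<double>(stop - start).count();
}

// Return the label with the largest accumulated time, i.e. the hotspot.
std::string hotspot(const Totals& totals) {
    assert(!totals.empty());
    auto best = totals.begin();
    for (auto it = totals.begin(); it != totals.end(); ++it) {
        if (it->second > best->second) {
            best = it;
        }
    }
    return best->first;
}
```

Wrapping each call of interest in `time_call` and then asking for `hotspot(totals)` gives a crude answer to "where is time being spent". A real profiler collects the same kind of information without modifying the source, typically by sampling the running program.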

Why are the hotspots of the code so important? Amdahl's law bounds the overall speedup by the fraction of run time that is not accelerated, so parallelizing the most time-consuming routines (i.e. the hotspots) will have the most impact.
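Amdahl's law can be written as S = 1 / ((1 - p) + p / s), where p is the fraction of run time spent in the accelerated routine and s is the speedup of that routine alone. The sketch below evaluates this formula; the function names are hypothetical and chosen only for illustration.

```cpp
#include <cassert>

// Overall speedup predicted by Amdahl's law when a fraction `p` of the
// run time (0 <= p <= 1) is accelerated by a factor `s` (s > 0).
double amdahl_speedup(double p, double s) {
    assert(p >= 0.0 && p <= 1.0);
    assert(s > 0.0);
    return 1.0 / ((1.0 - p) + p / s);
}

// Upper bound on the overall speedup as `s` grows without limit.
// Requires p < 1, otherwise the bound is infinite.
double amdahl_limit(double p) {
    assert(p >= 0.0 && p < 1.0);
    return 1.0 / (1.0 - p);
}
```

For example, a routine that accounts for 84% of the run time allows at most 1 / 0.16 = 6.25 times overall speedup, while a routine that accounts for 3% can never yield more than about 1.03 times, however well it is parallelized.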

Build the Sample Code

For this example we will use a code from the repositories. Download the package and change to the cpp or f90 directory. The point of this exercise is to compile and link the code, obtain an executable, and then profile it.

As of May 2016, compiler support for OpenACC is still relatively scarce. OpenACC is being pushed by NVIDIA, through its Portland Group division, as well as by Cray, and these two lines of compilers offer the most advanced OpenACC support. GNU Compiler support for OpenACC exists, but is considered experimental in version 5. It is expected to be officially supported in version 6 of the compiler.

For the purpose of this tutorial, we use version 16.3 of the Portland Group compilers. We note that Portland Group compilers are free for academic use.

[name@server ~]$ make

pgc++ -fast -c -o main.o main.cpp

"vector.h", line 30: warning: variable "vcoefs" was declared but never

referenced

double *vcoefs=v.coefs;

^

pgc++ main.o -o cg.x -fast

Once the executable is created, we can profile it.

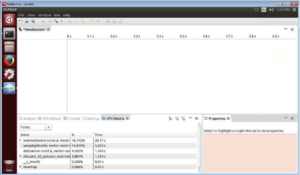

For the purpose of this tutorial, we use PGPROF, a powerful and simple analyzer for parallel programs written with OpenMP or OpenACC directives, or with CUDA. We note that the Portland Group profiler is free for academic use.

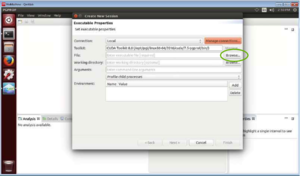

Below are several snapshots demonstrating how to get started with the PGPROF profiler. The first step is to initiate a new session. Then browse for the executable file of the code you want to profile.

Then specify the profiling options. For example, if you need to profile CPU activity, check the "Profile execution of the CPU" box.

NVIDIA Visual Profiler

Another profiler available for OpenACC applications is the NVIDIA Visual Profiler. It is a cross-platform analysis tool for codes written with OpenACC directives and CUDA C/C++.

NVIDIA NVPROF Command Line Profiler

NVIDIA also provides a command line version called NVPROF, similar to GNU gprof.

[name@server ~]$ nvprof --cpu-profiling on ./cg.x

<Program output >

======== CPU profiling result (bottom up):

84.25% matvec(matrix const &, vector const &, vector const &)

84.25% main

9.50% waxpby(double, vector const &, double, vector const &, vector const &)

3.37% dot(vector const &, vector const &)

2.76% allocate_3d_poisson_matrix(matrix&, int)

2.76% main

0.11% __c_mset8

0.03% munmap

0.03% free_matrix(matrix&)

0.03% main

======== Data collected at 100Hz frequency
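The routine names in this profile suggest a conjugate-gradient solver whose run time is dominated by a sparse matrix-vector product. As an illustration only, the sketch below shows what such a kernel commonly looks like for a matrix stored in compressed sparse row (CSR) form. The `CsrMatrix` type and `csr_matvec` function are hypothetical; they are not the tutorial's actual `matvec` source, and the storage format is an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical CSR storage: row i owns the entries
// [row_offsets[i], row_offsets[i + 1]) of `cols` and `coefs`.
struct CsrMatrix {
    std::size_t num_rows;
    std::vector<std::size_t> row_offsets;  // size num_rows + 1
    std::vector<std::size_t> cols;         // column index of each entry
    std::vector<double> coefs;             // value of each entry
};

// Compute y = A * x. The doubly nested loop touches every stored
// nonzero once, which is why this kind of routine tends to dominate
// the run time of an iterative solver.
void csr_matvec(const CsrMatrix& A, const std::vector<double>& x,
                std::vector<double>& y) {
    assert(A.row_offsets.size() == A.num_rows + 1);
    assert(y.size() == A.num_rows);
    for (std::size_t i = 0; i < A.num_rows; ++i) {
        double sum = 0.0;
        for (std::size_t j = A.row_offsets[i]; j < A.row_offsets[i + 1]; ++j) {
            sum += A.coefs[j] * x[A.cols[j]];
        }
        y[i] = sum;
    }
}
```

Each row of the outer loop is independent of the others, which is the property that usually makes a routine like this a good first candidate for parallelization.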

NVIDIA NVPROF Command Line Profiler

Before working on the routine, we need to understand what the compiler is actually doing. There are several questions we should ask ourselves:

- What optimizations were applied?

- What prevented further optimizations?

- Can very minor modifications of the code affect the performance?