MPI: Difference between revisions

(Started adding MPI content from the Sharcnet regional guide.) |


Revision as of 18:21, 15 September 2016

A Primer on Parallel Programming

The most significant hurdle to overcome in learning to design and build parallel applications boils down to a very simple concept: communication. To quote Gropp, Lusk and Skjellum from their seminal reference (Using MPI): "to pull a bigger wagon it is easier to add more oxen than to find (or build) a bigger ox".

Most people would see this as simply common sense. In order to build a house as quickly as possible, we do not look for a faster person to do all the construction; we use multiple people and spread the work among them so that tasks are performed in parallel. Computational problems are superficially the same thing. There is a limit to how big and fast we can expect to build a single machine, so we attempt to partition the problem and assign the pieces to multiple computers to be completed concurrently.

Complexity arises due to communication requirements. In order for multiple workers to accomplish a task in parallel, they need to be able to communicate with one another. In the context of software, we have many processes, each working on part of a solution, yet each needing values that were computed by other processes or needing to provide locally computed values to its peers.

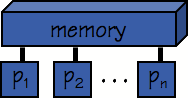

There are two major architectural paradigms in which we typically leverage computational parallelism. A shared memory architecture (commonly abbreviated SMP, although a variety of shared memory models are implied by this) is one in which all memory is globally addressable from the programmer's point of view (i.e. all processors see the same memory image). Communication between processes on an SMP machine is implicit: any process can read and write values in memory that can subsequently be manipulated directly by others. The challenges in writing these kinds of programs are related to data consistency: for example, one must take steps to ensure that data cannot be modified by more than one processor at a time.

The other architecture is equivalent to a collection of workstations linked by a dedicated network for communication: a cluster. In this model, processes can be running on physically distinct machines, each with its own private memory. When processes need to communicate, they must do so explicitly. A process wishing to send data must invoke a function specifically to send it, and the destination process must invoke a function to receive it (this is commonly referred to as message passing). The challenges in cluster programming are related to minimizing communication overhead. Networks, even the fastest dedicated hardware interconnects, move data at least an order of magnitude more slowly than is possible within a single machine (memory access times are typically on the order of one to a few hundred nanoseconds, while network latency is measured in microseconds).

With these basic concepts out of the way, the remainder of this tutorial will consider basic issues of programming on a cluster using the Message Passing Interface.

What is MPI?

The Message Passing Interface (MPI) is a library providing message passing support for parallel/distributed applications running on a cluster (strictly speaking, MPI is not restricted to use on clusters; however, we will ignore such fine detail in this tutorial). MPI is not a language; rather, it is a collection of subroutines in Fortran, functions/macros in C, and objects in C++ that implement explicit communication between processes.

On the plus side, MPI is an open standard which promotes portability, scalability of applications that use it is generally good, and because memory is local to each process some aspects of debugging are simplified (it isn't possible for one process to interfere with the memory of another, and if a program generates a segmentation fault the resulting core file can be processed by standard serial debugging tools). Conversely, due to the need to explicitly manage communication and synchronization, MPI tends to be seen as slightly more complex than implicit techniques, and the problems of communication overhead can, if ignored, quickly overwhelm any speed-up from parallel computation.

As we turn now to consider the basics of programming with MPI, we will attempt to highlight a few of the issues that arise and discuss strategies to avoid them. Suggested references (both printed and online) are presented at the end of this tutorial and the reader is encouraged to consult them for additional information.

MPI Programming Basics

MPI bindings are defined for Fortran, C and C++. This tutorial presents code in the two most commonly used languages, C and Fortran; however, the concepts apply directly to whichever language you are using. Function names and constants are standardized, so generalizing this tutorial to another language should be straightforward, assuming the programmer is already familiar with the language of interest.

In the interest of simplicity and illustrating key concepts, our goal will be to parallelize the venerable "Hello, World!" program, which appears below for reference.

C CODE: hello.c

    #include <stdio.h>

    int main()
    {
        printf("Hello, world!\n");
        return(0);
    }

FORTRAN CODE: hello.f

    program hello
        print *, 'Hello, world!'
    end program hello

When compiled and run as shown below, the output of this program looks something like this:

    [orc-login1 ~]$ vi hello.c
    [orc-login1 ~]$ cc -Wall hello.c -o hello
    [orc-login1 ~]$ ./hello
    Hello, world!

SPMD Programming

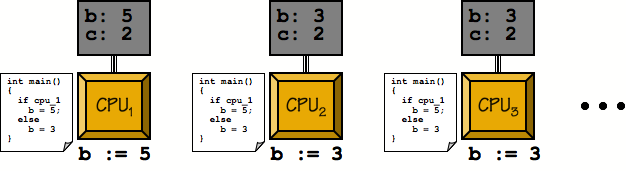

Parallel programs written using MPI make use of an execution model called Single Program, Multiple Data, or SPMD. Rather than writing several different applications that then run in parallel, the SPMD model involves starting up a number of copies of the same program. All running processes in an MPI job are assigned a unique integer identifier, referred to as the rank of the process, which a process can obtain at run-time. Where the behaviour of the program must diverge on different processors, conditional statements based on the rank are used so that each process executes the appropriate instructions.

Framework

In order to make use of the MPI facilities, we must include the relevant header file (mpi.h for C/C++, mpif.h for Fortran) and link the MPI library when building the program. While it is perfectly reasonable to do this manually, most MPI implementations provide a handy wrapper script (C: mpicc, Fortran: mpif90, C++: mpiCC) that handles the set-up of include and library directories, library linkage, etc. Our examples will all use this script, and it is recommended that you do the same unless some issue requires you to do it manually.

Note that other than the MPI library we linked to our executable, there is nothing coordinating the activities of the running programs. This is performed cooperatively inside the MPI library of the running processes. We are thus required to explicitly initialize this process by calling an initialization function before we make use of any MPI features in our code. The prototype for this function appears below:

C API

    int MPI_Init(int *argc, char **argv[]);

FORTRAN API

    MPI_INIT(IERR)
    INTEGER :: IERR

The arguments to the C function are pointers to the argc and argv variables that represent the command-line arguments to the program. This permits MPI to "fix" the command-line arguments in cases where the job launcher may have modified them. It is worth noting that C MPI functions return the error status of the function explicitly, whereas all Fortran subroutines take an additional argument, IERR, in which the error status is recorded before the subroutine returns.

Similarly, we must call a function to allow the library to do any clean-up that might be required before our program exits. The prototype for this function, which takes no arguments other than the error parameter in Fortran, appears below:

C API

    int MPI_Finalize(void);

FORTRAN API

    MPI_FINALIZE(IERR)
    INTEGER :: IERR

As a rule of thumb, it is a good idea to perform the initialization as the first statement of our program, and the finalize operation as the last statement before program termination. Let's take a moment and modify our Hello, world! program to take these basic issues into consideration.

C CODE: phello0.c

    #include <stdio.h>
    #include <mpi.h>

    int main(int argc, char *argv[])
    {
        MPI_Init(&argc, &argv);

        printf("Hello, world!\n");

        MPI_Finalize();
        return(0);
    }

FORTRAN CODE: phello0.f

    program phello0
        include "mpif.h"
        integer :: ierror

        call MPI_INIT(ierror)

        print *, 'Hello, world!'

        call MPI_FINALIZE(ierror)
    end program phello0

Rank and Size

While it is now possible to run this program under the control of MPI, each process will still just output the original string, which isn't very interesting. Let's begin by having each process output its rank and how many processes are running in total. This information is obtained at run-time by the use of the following functions.

C API

    int MPI_Comm_size(MPI_Comm comm, int *nproc);
    int MPI_Comm_rank(MPI_Comm comm, int *myrank);

FORTRAN API

    MPI_COMM_SIZE(COMM, NPROC, IERR)
    INTEGER :: COMM, NPROC, IERR

    MPI_COMM_RANK(COMM, RANK, IERR)
    INTEGER :: COMM, RANK, IERR

MPI_Comm_size will report the number of processes running as part of this job by assigning it to the result parameter nproc. Similarly, MPI_Comm_rank reports the rank of the calling process to the result parameter myrank. Ranks in MPI start counting from 0 rather than 1, so given N processes we expect the ranks to be 0..(N-1). The comm argument is a communicator, which is a set of processes capable of sending messages to one another. For the purpose of this tutorial we will always pass in the predefined value MPI_COMM_WORLD, which is simply all the processes started with the job (it is possible to define and use your own communicators, however that is beyond the scope of this tutorial and the reader is referred to the provided references for additional detail).

Let us incorporate these functions into our program, and have each process output its rank and size information. Note that since we are still having all processes perform the same function, there are no conditional blocks required in the code.

C CODE: phello1.c

    #include <stdio.h>
    #include <mpi.h>

    int main(int argc, char *argv[])
    {
        int rank, size;

        MPI_Init(&argc, &argv);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);
        MPI_Comm_size(MPI_COMM_WORLD, &size);

        printf("Hello, world! "
               "from process %d of %d\n", rank, size);

        MPI_Finalize();
        return(0);
    }

FORTRAN CODE: phello1.f

    program phello1
        include "mpif.h"
        integer :: rank, size, ierror

        call MPI_INIT(ierror)
        call MPI_COMM_SIZE(MPI_COMM_WORLD, size, ierror)
        call MPI_COMM_RANK(MPI_COMM_WORLD, rank, ierror)

        print *, 'Hello from process ', rank, ' of ', size

        call MPI_FINALIZE(ierror)
    end program phello1

Compile and run this program on 2, 4 and 8 processors. Note that each running process produces output based on the values of its local variables, as one should expect. As you run the program on more processors, you will start to see that the order of the output from the different processes is not regular. The stdout of all running processes is simply concatenated together; you should make no assumptions about the order of output from different processes.