Running jobs

<translate>
<!--T:54-->
This page is intended for the user who is already familiar with the concepts of job scheduling and job scripts, and who wants guidance on submitting jobs to our clusters.
If you have not worked on a large shared computer cluster before, you should probably read [[What is a scheduler?]] first.

<!--T:112-->
{{box|<b>All jobs must be submitted via the scheduler!</b>
<br>
Exceptions are made for compilation and other tasks not expected to consume more than about 10 CPU-minutes and about 4 gigabytes of RAM. Such tasks may be run on a login node. In no case should you run processes on compute nodes except via the scheduler.}}

<!--T:55-->
On our clusters, the job scheduler is the
[https://en.wikipedia.org/wiki/Slurm_Workload_Manager Slurm Workload Manager].
Comprehensive [https://slurm.schedmd.com/documentation.html documentation for Slurm] is maintained by SchedMD. If you are coming to Slurm from PBS/Torque, SGE, LSF, or LoadLeveler, you might find this table of [https://slurm.schedmd.com/rosetta.pdf corresponding commands] useful.

==Use <code>sbatch</code> to submit jobs== <!--T:56-->
The command to submit a job is [https://slurm.schedmd.com/sbatch.html <code>sbatch</code>]:
<source lang="bash">
$ sbatch simple_job.sh
Submitted batch job 123456
</source>

<!--T:57-->
A minimal Slurm job script looks like this:
{{File
|name=simple_job.sh
|lang="sh"
|contents=
#!/bin/bash
#SBATCH --time=00:15:00
#SBATCH --account=def-someuser
echo 'Hello, world!'
sleep 30
}}

<!--T:58-->
On general-purpose (GP) clusters, this job reserves 1 core and 256 MB of memory for 15 minutes. On [[Niagara]], this job reserves the whole node with all its memory.
Directives (or <i>options</i>) in the job script are prefixed with <code>#SBATCH</code> and must precede all executable commands. All available directives are described on the [https://slurm.schedmd.com/sbatch.html sbatch page]. Our policies require that you supply at least a time limit (<code>--time</code>) for each job. You may also need to supply an account name (<code>--account</code>). See [[#Accounts and projects|Accounts and projects]] below.

<!--T:59-->
You can also specify directives as command-line arguments to <code>sbatch</code>. For example,
 $ sbatch --time=00:30:00 simple_job.sh
will submit the above job script with a time limit of 30 minutes. The acceptable time formats include "minutes", "minutes:seconds", "hours:minutes:seconds", "days-hours", "days-hours:minutes" and "days-hours:minutes:seconds". Please note that the time limit will strongly affect how quickly the job is started, since longer jobs are [[Job_scheduling_policies|eligible to run on fewer nodes]].
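To make the accepted time formats concrete, here is a minimal sketch of a helper that converts a time-limit string in any of the six formats listed above into seconds. The function name <code>slurm_time_to_seconds</code> is hypothetical and is not part of Slurm; it only illustrates how each format is read.

```shell
#!/bin/bash
# Hypothetical helper (not part of Slurm): convert a --time value to seconds.
slurm_time_to_seconds() {
    local spec=$1 days=0 rest h=0 m=0 s=0
    local -a f
    if [[ $spec == *-* ]]; then
        # days-hours[:minutes[:seconds]]
        days=${spec%%-*}
        rest=${spec#*-}
        IFS=: read -r -a f <<< "$rest"
        h=${f[0]:-0}; m=${f[1]:-0}; s=${f[2]:-0}
    else
        IFS=: read -r -a f <<< "$spec"
        case ${#f[@]} in
            1) m=${f[0]} ;;                          # minutes
            2) m=${f[0]}; s=${f[1]} ;;               # minutes:seconds
            3) h=${f[0]}; m=${f[1]}; s=${f[2]} ;;    # hours:minutes:seconds
            *) echo "unrecognized time format: $spec" >&2; return 1 ;;
        esac
    fi
    # 10# forces base 10 so that values such as "08" are not read as octal
    echo $(( 10#$days * 86400 + 10#$h * 3600 + 10#$m * 60 + 10#$s ))
}

slurm_time_to_seconds 00:15:00     # 900
slurm_time_to_seconds 0-0:5        # 300
slurm_time_to_seconds 2-00:00:00   # 172800
```

Note that a bare number is read as minutes, not seconds, so <code>--time=30</code> requests half an hour.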

<!--T:114-->
Please be cautious if you use a script to submit multiple Slurm jobs in a short time. Submitting thousands of jobs at a time can cause Slurm to become [[Frequently_Asked_Questions#sbatch:_error:_Batch_job_submission_failed:_Socket_timed_out_on_send/recv_operation|unresponsive]] to other users. Consider using an [[Running jobs#Array job|array job]] instead, or use <code>sleep</code> to space out calls to <code>sbatch</code> by one second or more.
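The spacing advice above can be sketched as a small wrapper function. This is an illustration only: <code>submit_spaced</code>, <code>SUBMIT_CMD</code> and <code>SUBMIT_DELAY</code> are hypothetical names introduced for this example, and an array job remains the preferred approach when the jobs are similar.

```shell
#!/bin/bash
# Sketch: submit several job scripts with a pause between sbatch calls.
# SUBMIT_CMD and SUBMIT_DELAY are hypothetical variables introduced for this
# example; set SUBMIT_CMD=echo to preview the list without submitting.
submit_spaced() {
    local submit_cmd=${SUBMIT_CMD:-sbatch}
    local delay=${SUBMIT_DELAY:-1}      # seconds between submissions
    local script
    for script in "$@"; do
        "$submit_cmd" "$script"
        sleep "$delay"
    done
}

# Example: submit_spaced job_01.sh job_02.sh job_03.sh
```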

=== Memory === <!--T:161-->

<!--T:106-->
Memory may be requested with <code>--mem-per-cpu</code> (memory per core) or <code>--mem</code> (memory per node). On general-purpose (GP) clusters, a default memory amount of 256 MB per core will be allocated unless you make some other request. On [[Niagara]], only whole nodes are allocated along with all available memory, so a memory specification is not required there.

<!--T:162-->
A common source of confusion comes from the fact that some memory on a node is not available to the job (reserved for the OS, etc.). The effect of this is that each node type has a maximum amount available to jobs; for instance, nominally "128G" nodes are typically configured to permit 125G of memory to user jobs. If you request more memory than a node-type provides, your job will be constrained to run on higher-memory nodes, which may be fewer in number.

<!--T:163-->
Adding to this confusion, Slurm interprets K, M, G, etc., as [https://en.wikipedia.org/wiki/Binary_prefix binary prefixes], so <code>--mem=125G</code> is equivalent to <code>--mem=128000M</code>. See the <i>Available memory</i> column in the <i>Node characteristics</i> table for each GP cluster for the Slurm specification of the maximum memory you can request on each node: [[Béluga/en#Node_characteristics|Béluga]], [[Cedar#Node_characteristics|Cedar]], [[Graham#Node_characteristics|Graham]], [[Narval/en#Node_characteristics|Narval]].
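The equivalence between 125G and 128000M follows directly from the binary prefix: one G is 1024 M. A minimal sketch of the arithmetic, using a hypothetical helper name <code>mem_g_to_m</code>:

```shell
#!/bin/bash
# Slurm reads G as 1024 M, so convert a value in G to the equivalent value in M.
# mem_g_to_m is a hypothetical name used only for this illustration.
mem_g_to_m() {
    echo "$(( $1 * 1024 ))M"
}

mem_g_to_m 125   # prints 128000M, matching --mem=128000M above
```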

==Use <code>squeue</code> or <code>sq</code> to list jobs== <!--T:60-->

<!--T:61-->
The general command for checking the status of Slurm jobs is <code>squeue</code>, but by default it supplies information about <b>all</b> jobs in the system, not just your own. You can use the shorter <code>sq</code> to list only your own jobs:

<!--T:62-->
<source lang="bash">
$ sq
   JOBID     USER      ACCOUNT      NAME  ST   TIME_LEFT NODES CPUS    GRES MIN_MEM NODELIST (REASON)
  123456   smithj   def-smithj  simple_j   R        0:03     1    1  (null)      4G cdr234 (None)
  123457   smithj   def-smithj  bigger_j  PD  2-00:00:00     1   16  (null)     16G (Priority)
</source>

<!--T:12-->
The ST column of the output shows the status of each job. The two most common states are PD for <i>pending</i> and R for <i>running</i>.

<!--T:167-->
If you want to know more about the output of <code>sq</code> or <code>squeue</code>, or learn how to change the output, see the [https://slurm.schedmd.com/squeue.html online manual page for squeue]. <code>sq</code> is a local customization.

<!--T:115-->
<b>Do not</b> run <code>sq</code> or <code>squeue</code> from a script or program at high frequency (e.g. every few seconds). Responding to <code>squeue</code> adds load to Slurm, and may interfere with its performance or correct operation. See [[#Email_notification|Email notification]] below for a much better way to learn when your job starts or ends.

==Where does the output go?== <!--T:63-->

<!--T:64-->
By default the output is placed in a file named "slurm-", suffixed with the job ID number and ".out" (e.g. <code>slurm-123456.out</code>), in the directory from which the job was submitted.
Having the job ID as part of the file name is convenient for troubleshooting.

<!--T:176-->
A different name or location can be specified if your workflow requires it by using the <code>--output</code> directive.
Certain replacement symbols can be used in a filename specified this way, such as the job ID number, the job name, or the [[Job arrays|job array]] task ID.
See the [https://slurm.schedmd.com/sbatch.html vendor documentation on sbatch] for a complete list of replacement symbols and some examples of their use.

<!--T:16-->
Error output will normally appear in the same file as standard output, just as it would if you were typing commands interactively. If you want to send the standard error channel (stderr) to a separate file, use <code>--error</code>.
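As an illustration of the two directives above, the following sketch names the output and error files after the job name (<code>%x</code>) and the job ID (<code>%j</code>), two of the replacement symbols listed in the sbatch documentation. The job name <code>demo</code> is a placeholder chosen for this example.

```shell
#!/bin/bash
#SBATCH --time=00:15:00
#SBATCH --job-name=demo            # placeholder job name for this example
#SBATCH --output=%x-%j.out         # standard output, e.g. demo-123456.out
#SBATCH --error=%x-%j.err          # standard error,  e.g. demo-123456.err
echo "this line goes to the .out file"
echo "this line goes to the .err file" >&2
```

Keeping <code>%j</code> in the file name preserves the troubleshooting convenience of the default name.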

==Accounts and projects== <!--T:66-->

<!--T:67-->
Every job must have an associated account name corresponding to a [[Frequently_Asked_Questions_about_the_CCDB#What_is_a_RAP.3F|Resource Allocation Project]] (RAP). If you are a member of only one account, the scheduler will automatically associate your jobs with that account.

<!--T:107-->
If you receive one of the following messages when you submit a job, then you have access to more than one account:
<pre>
 You are associated with multiple _cpu allocations...
 Please specify one of the following accounts to submit this job:
</pre>

<!--T:108-->
<pre>
 You are associated with multiple _gpu allocations...
 Please specify one of the following accounts to submit this job:
</pre>

<!--T:173-->
In this case, use the <code>--account</code> directive to specify one of the accounts listed in the error message, e.g.:
 #SBATCH --account=def-user-ab

<!--T:68--> | |||

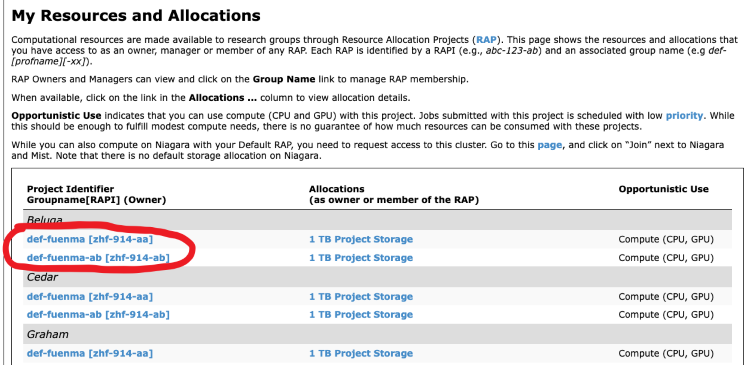

To find out which account name corresponds | |||

to a given Resource Allocation Project, log in to [https://ccdb.alliancecan.ca CCDB] | |||

and click on <i>My Account -> My Resources and Allocations</i>. You will see a list of all the projects | |||

you are a member of. The string you should use with the <code>--account</code> for | |||

a given project is under the column <i>Group Name</i>. Note that a Resource | |||

Allocation Project may only apply to a specific cluster (or set of clusters) and therefore | |||

may not be transferable from one cluster to another. | |||

<!--T:69--> | |||

In the illustration below, jobs submitted with <code>--account=def-fuenma</code> will be accounted against RAP zhf-914-aa | |||

<!--T:70--> | |||

[[File:Find-group-name-EN.png|750px|frame|left| Finding the group name for a Resource Allocation Project (RAP)]] | |||

<br clear=all> <!-- This is to prevent the next section from filling to the right of the image. --> | |||

<!--T:71-->
If you plan to use one account consistently for all jobs, once you have determined the right account name you may find it convenient to set the following three environment variables in your <code>~/.bashrc</code> file:
 export SLURM_ACCOUNT=def-someuser
 export SBATCH_ACCOUNT=$SLURM_ACCOUNT
 export SALLOC_ACCOUNT=$SLURM_ACCOUNT
Slurm will use the value of <code>SBATCH_ACCOUNT</code> in place of the <code>--account</code> directive in the job script. Note that even if you supply an account name inside the job script, <i>the environment variable takes priority.</i> In order to override the environment variable, you must supply an account name as a command-line argument to <code>sbatch</code>.

<!--T:72-->
<code>SLURM_ACCOUNT</code> plays the same role as <code>SBATCH_ACCOUNT</code>, but for the <code>srun</code> command instead of <code>sbatch</code>. The same idea holds for <code>SALLOC_ACCOUNT</code>.

== Examples of job scripts == <!--T:17-->

=== Serial job === <!--T:146-->
A serial job is a job which only requests a single core. It is the simplest type of job. The "simple_job.sh" which appears above in [[#Use_sbatch_to_submit_jobs|Use sbatch to submit jobs]] is an example.

=== Array job === <!--T:27-->
Also known as a <i>task array</i>, an array job is a way to submit a whole set of jobs with one command. The individual jobs in the array are distinguished by an environment variable, <code>$SLURM_ARRAY_TASK_ID</code>, which is set to a different value for each instance of the job. The following example will create 10 tasks, with values of <code>$SLURM_ARRAY_TASK_ID</code> ranging from 1 to 10:

<!--T:147-->
{{File
|name=array_job.sh
|lang="sh"
|contents=
#!/bin/bash
#SBATCH --account=def-someuser
#SBATCH --time=0-0:5
#SBATCH --array=1-10
./myapplication $SLURM_ARRAY_TASK_ID
}}

<!--T:142-->
For more examples, see [[Job arrays]]. See [https://slurm.schedmd.com/job_array.html Job Array Support] for detailed documentation.
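A common way to use the task ID is to map it to one line of a file that lists the inputs, so that task 1 processes the first input, task 2 the second, and so on. The sketch below assumes a hypothetical list file named <code>input_list.txt</code> with one input per line; the helper name <code>nth_line</code> is also introduced only for this illustration.

```shell
#!/bin/bash
# Hypothetical helper: print line number $2 of the list file $1.
nth_line() {
    sed -n "${2}p" "$1"
}

# Inside an array job script one might then write, for example:
#   input=$(nth_line input_list.txt "$SLURM_ARRAY_TASK_ID")
#   ./myapplication "$input"
```

With this pattern the range given to <code>--array</code> should match the number of lines in the list file.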

=== Threaded or OpenMP job === <!--T:21-->
This example script launches a single process with eight CPU cores. Bear in mind that for an application to use OpenMP it must be compiled with the appropriate flag, e.g. <code>gcc -fopenmp ...</code> or <code>icc -qopenmp ...</code>

<!--T:22-->
{{File
|lang="sh"
|contents=
#!/bin/bash
#SBATCH --account=def-someuser
#SBATCH --time=0-0:5
#SBATCH --cpus-per-task=8
export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK
./ompHello
}}

=== MPI job === <!--T:18-->

<!--T:51-->
This example script launches four MPI processes, each with 1024 MB of memory. The run time is limited to 5 minutes.

<!--T:19-->
{{File
|name=mpi_job.sh
|lang="sh"
|contents=
#!/bin/bash
#SBATCH --account=def-someuser
#SBATCH --ntasks=4               # number of MPI processes
#SBATCH --mem-per-cpu=1024M      # memory; default unit is megabytes
#SBATCH --time=0-00:05           # time (DD-HH:MM)
srun ./mpi_program               # mpirun or mpiexec also work
}}

<!--T:20-->
Large MPI jobs, specifically those which can efficiently use whole nodes, should use <code>--nodes</code> and <code>--ntasks-per-node</code> instead of <code>--ntasks</code>. Hybrid MPI/threaded jobs are also possible. For more on these and other options relating to distributed parallel jobs, see [[Advanced MPI scheduling]].

<!--T:23-->
For more on writing and running parallel programs with OpenMP, see [[OpenMP]].

=== GPU job === <!--T:24-->
There are many options involved in requesting GPUs because
* the GPU-equipped nodes at [[Cedar]] and [[Graham]] have different configurations,
* there are two different configurations at Cedar, and
* there are different policies for the different Cedar GPU nodes.
Please see [[Using GPUs with Slurm]] for a discussion and examples of how to schedule various job types on the available GPU resources.

== Interactive jobs == <!--T:28-->
Though batch submission is the most common and most efficient way to take advantage of our clusters, interactive jobs are also supported. These can be useful for things like:
* Data exploration at the command line
* Interactive console tools like R and IPython
* Significant software development, debugging, or compiling

<!--T:29-->
You can start an interactive session on a compute node with [https://slurm.schedmd.com/salloc.html salloc]. In the following example we request one task, which corresponds to one CPU core and 3 GB of memory, for an hour:
 $ salloc --time=1:0:0 --mem-per-cpu=3G --ntasks=1 --account=def-someuser
 salloc: Granted job allocation 1234567
 $ ...             # do some work
 $ exit            # terminate the allocation
 salloc: Relinquishing job allocation 1234567

<!--T:129-->
It is also possible to run graphical programs interactively on a compute node by adding the <b>--x11</b> flag to your <code>salloc</code> command. In order for this to work, you must first connect to the cluster with X11 forwarding enabled (see the [[SSH]] page for instructions on how to do that). Note that an interactive job with a duration of three hours or less will likely start very soon after submission as we have dedicated test nodes for jobs of this duration. Interactive jobs that request more than three hours run on the cluster's regular set of nodes and may wait for many hours or even days before starting, at an unpredictable (and possibly inconvenient) hour.

== Monitoring jobs == <!--T:31-->

=== Current jobs === <!--T:148-->

<!--T:32-->
By default [https://slurm.schedmd.com/squeue.html squeue] will show all the jobs the scheduler is managing at the moment. It will run much faster if you ask only about your own jobs with
 $ squeue -u $USER
You can also use the utility <code>sq</code> to do the same thing with less typing.

<!--T:33-->
You can show only running jobs, or only pending jobs:
 $ squeue -u <username> -t RUNNING
 $ squeue -u <username> -t PENDING

<!--T:34-->
You can show detailed information for a specific job with [https://slurm.schedmd.com/scontrol.html scontrol]:
 $ scontrol show job -dd <jobid>

<!--T:160-->
<b>Do not</b> run <code>squeue</code> from a script or program at high frequency (e.g., every few seconds). Responding to <code>squeue</code> adds load to Slurm and may interfere with its performance or correct operation.

==== Email notification ==== <!--T:149-->

<!--T:36-->
You can ask to be notified by email of certain job conditions by supplying options to sbatch:
 #SBATCH --mail-user=your.email@example.com
 #SBATCH --mail-type=ALL
Please '''do not turn on these options''' unless you are going to read the emails they generate!
We occasionally have email service providers (Google, Yahoo, etc.) restrict the flow of mail from our domains
because one user is generating a huge volume of unnecessary emails via these options.

<!--T:182-->
For a complete list of the options for <code>--mail-type</code> see [https://slurm.schedmd.com/sbatch.html#OPT_mail-type SchedMD's documentation].
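One way to keep the volume of mail down is to request only the events you will act on. As a sketch, the following asks for a message when the job ends or fails, rather than for every event; <code>END</code> and <code>FAIL</code> are two of the values listed in SchedMD's documentation, and the address is a placeholder.

```shell
#SBATCH --mail-user=your.email@example.com
#SBATCH --mail-type=END,FAIL
```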

==== Output buffering ==== <!--T:168-->

<!--T:169-->
Output from a non-interactive Slurm job is normally <i>buffered</i>, which means that there is usually a delay between when data is written by the job and when you can see the output on a login node. Depending on the application you are running and the load on the filesystem, this delay can range from less than a second to many minutes, or until the job completes.

<!--T:170-->
There are methods to reduce or eliminate the buffering, but we do not recommend using them because buffering is vital to preserving the overall performance of the filesystem. If you need to monitor the output from a job in <i>real time</i>, we recommend you run an [[#Interactive_jobs|interactive job]] as described above.

=== Completed jobs === <!--T:150-->

<!--T:151-->
Get a short summary of the CPU and memory efficiency of a job with <code>seff</code>:
 $ seff 12345678
 Job ID: 12345678
 Cluster: cedar
 User/Group: jsmith/jsmith
 State: COMPLETED (exit code 0)
 Cores: 1
 CPU Utilized: 02:48:58
 CPU Efficiency: 99.72% of 02:49:26 core-walltime
 Job Wall-clock time: 02:49:26
 Memory Utilized: 213.85 MB
 Memory Efficiency: 0.17% of 125.00 GB
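The two efficiency figures in this output can be recomputed by hand: CPU efficiency is the CPU time used divided by the core-walltime (cores multiplied by wall-clock time), and memory efficiency is the memory used divided by the memory requested. The following is a minimal sketch of that arithmetic using the numbers shown above; the helper names are hypothetical and this is not how <code>seff</code> itself is implemented.

```shell
#!/bin/bash
# Sketch: recompute the two efficiency figures from the seff output above.
# hms_to_seconds converts HH:MM:SS to seconds.
hms_to_seconds() {
    local h m s
    IFS=: read -r h m s <<< "$1"
    echo $(( 10#$h * 3600 + 10#$m * 60 + 10#$s ))
}

# percent NUMERATOR DENOMINATOR: print the ratio as a percentage, 2 decimals
percent() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f%%\n", 100 * a / b }'
}

cores=1
cpu_used=$(hms_to_seconds 02:48:58)     # 10138 s
walltime=$(hms_to_seconds 02:49:26)     # 10166 s
percent "$cpu_used" $(( cores * walltime ))   # CPU efficiency: 99.72%
percent 213.85 $(( 125 * 1024 ))              # memory efficiency: 0.17%
```

A memory efficiency this low suggests that the job could have requested far less memory than 125 GB.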

<!--T:35-->
Find more detailed information about a completed job with [https://slurm.schedmd.com/sacct.html sacct], and optionally, control what it prints using <code>--format</code>:
 $ sacct -j <jobid>
 $ sacct -j <jobid> --format=JobID,JobName,MaxRSS,Elapsed

<!--T:153-->
The output from <code>sacct</code> typically includes records labelled <code>.bat+</code> and <code>.ext+</code>, and possibly <code>.0, .1, .2, ...</code>.
The batch step (<code>.bat+</code>) is your submission script; for many jobs that's where the main part of the work is done and where the resources are consumed.
If you use <code>srun</code> in your submission script, that would create a <code>.0</code> step that would consume most of the resources.
The extern (<code>.ext+</code>) step is basically prologue and epilogue and normally doesn't consume any significant resources.

<!--T:73-->
If a node fails while running a job, the job may be restarted. <code>sacct</code> will normally show you only the record for the last (presumably successful) run. If you wish to see all records related to a given job, add the <code>--duplicates</code> option.

<!--T:52-->
Use the MaxRSS accounting field to determine how much memory a job needed. The value returned will be the largest [https://en.wikipedia.org/wiki/Resident_set_size resident set size] for any of the tasks. If you want to know which task and node this occurred on, print the MaxRSSTask and MaxRSSNode fields also.
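When turning a MaxRSS figure into a memory request for the next run, it can help to convert it to megabytes first. The sketch below assumes that the value is printed as an integer followed by a K, M or G suffix (for example <code>218984K</code>); that assumption, and the helper name <code>maxrss_to_mb</code>, are introduced only for this illustration.

```shell
#!/bin/bash
# Hypothetical helper: convert a MaxRSS value (assumed here to be an integer
# with a K, M or G suffix) to whole megabytes, using binary prefixes.
maxrss_to_mb() {
    local value=${1%[KMG]} unit=${1: -1}
    case $unit in
        K) echo $(( value / 1024 )) ;;
        M) echo "$value" ;;
        G) echo $(( value * 1024 )) ;;
        *) echo "unrecognized MaxRSS value: $1" >&2; return 1 ;;
    esac
}

maxrss_to_mb 218984K   # 213
```

It is usually sensible to request somewhat more than the observed maximum, since memory use can vary from run to run.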

<!--T:53-->
The [https://slurm.schedmd.com/sstat.html sstat] command works on a running job much the same way that [https://slurm.schedmd.com/sacct.html sacct] works on a completed job.

=== Attaching to a running job === <!--T:130-->
It is possible to connect to the node running a job and execute new processes there. You might want to do this for troubleshooting or to monitor the progress of a job.

<!--T:131-->
Suppose you want to run the utility [https://developer.nvidia.com/nvidia-system-management-interface <code>nvidia-smi</code>] to monitor GPU usage on a node where you have a job running. The following command runs <code>watch</code> on the node assigned to the given job, which in turn runs <code>nvidia-smi</code> every 30 seconds, displaying the output on your terminal.

<!--T:132-->
 $ srun --jobid 123456 --pty watch -n 30 nvidia-smi

<!--T:133-->
It is possible to launch multiple monitoring commands using [https://en.wikipedia.org/wiki/Tmux <code>tmux</code>]. The following command launches <code>htop</code> and <code>nvidia-smi</code> in separate panes to monitor the activity on a node assigned to the given job.

<!--T:134-->
 $ srun --jobid 123456 --pty tmux new-session -d 'htop -u $USER' \; split-window -h 'watch nvidia-smi' \; attach

<!--T:135-->
Processes launched with <code>srun</code> share the resources with the job specified. You should therefore be careful not to launch processes that would use a significant portion of the resources allocated for the job. Using too much memory, for example, might result in the job being killed; using too many CPU cycles will slow down the job.

<!--T:136-->
<b>Note:</b> The <code>srun</code> commands shown above work only to monitor a job submitted with <code>sbatch</code>. To monitor an interactive job, create multiple panes with <code>tmux</code> and start each process in its own pane.

==Cancelling jobs== <!--T:37-->

Use [https://slurm.schedmd.com/scancel.html scancel] with the job ID to cancel a job:

<!--T:39-->
 $ scancel <jobid>

<!--T:40-->
You can also use it to cancel all your jobs, or all your pending jobs:

<!--T:41-->
 $ scancel -u $USER
 $ scancel -t PENDING -u $USER

== Resubmitting jobs for long-running computations == <!--T:74-->

<!--T:75-->
When a computation is going to require a long time to complete, so long that it cannot be done within the time limits on the system,
the application you are running must support [[Points de contrôle/en|checkpointing]]. The application should be able to save its state to a file, called a <i>checkpoint file</i>, and
then it should be able to restart and continue the computation from that saved state.

<!--T:76-->
For many users restarting a calculation will be rare and may be done manually,
but some workflows require frequent restarts.
In this case some kind of automation technique may be employed.

<!--T:77-->
Here are two recommended methods of automatic restarting:
* Using SLURM <b>job arrays</b>.
* Resubmitting from the end of the job script.

<!--T:172-->
Our [[Tutoriel Apprentissage machine/en|Machine Learning tutorial]] covers [[Tutoriel_Apprentissage_machine/en#Checkpointing_a_long-running_job|resubmitting for long machine learning jobs]].

=== Restarting using job arrays === <!--T:90-->

<!--T:91-->
Using the <code>--array=1-10%1</code> syntax one can submit a collection of identical jobs with the condition that only one of them will run at any given time. The number after the <code>%</code> is the maximum number of array tasks allowed to run simultaneously.
The script should be written to ensure that the last checkpoint is always used for the next job. The number of restarts is fixed by the <code>--array</code> argument.

<!--T:78-->
Consider, for example, a molecular dynamics simulation that has to be run for 1 000 000 steps and does not fit into the time limit on the cluster.
We can split the simulation into 10 smaller jobs of 100 000 steps, one after another.

<!--T:79-->
An example of using a job array to restart a simulation:

{{File
|name=job_array_restart.sh
|lang="sh"
|contents=
#!/bin/bash
# ---------------------------------------------------------------------
# SLURM script for a multi-step job on our clusters.
# ---------------------------------------------------------------------
#SBATCH --account=def-someuser
#SBATCH --cpus-per-task=1
#SBATCH --time=0-10:00
#SBATCH --mem=100M
#SBATCH --array=1-10%1   # Run a 10-job array, one job at a time.
# ---------------------------------------------------------------------
echo "Current working directory: `pwd`"
echo "Starting run at: `date`"
# ---------------------------------------------------------------------
echo ""
echo "Job Array ID / Job ID: $SLURM_ARRAY_JOB_ID / $SLURM_JOB_ID"
echo "This is job $SLURM_ARRAY_TASK_ID out of $SLURM_ARRAY_TASK_COUNT jobs."
echo ""
# ---------------------------------------------------------------------
# Run your simulation step here...

<!--T:92-->
if test -e state.cpt; then
     # There is a checkpoint file, restart;
     mdrun --restart state.cpt
else
     # There is no checkpoint file, start a new simulation.
     mdrun
fi

<!--T:93-->
# ---------------------------------------------------------------------
echo "Job finished with exit code $? at: `date`"
# ---------------------------------------------------------------------
}}

=== Resubmission from the job script === <!--T:94-->

<!--T:95-->
In this case one submits a job that runs the first chunk of the calculation and saves a checkpoint.
Once the chunk is done but before the allocated run-time of the job has elapsed,
the script checks if the end of the calculation has been reached.
If the calculation is not yet finished, the script submits a copy of itself to continue working.

<!--T:96-->
An example of a job script with resubmission:

{{File
|name=job_resubmission.sh
|lang="sh"
|contents=
#!/bin/bash
# ---------------------------------------------------------------------
# SLURM script for job resubmission on our clusters.
# ---------------------------------------------------------------------
#SBATCH --job-name=job_chain
#SBATCH --account=def-someuser
#SBATCH --cpus-per-task=1
#SBATCH --time=0-10:00
#SBATCH --mem=100M
# ---------------------------------------------------------------------
echo "Current working directory: `pwd`"
echo "Starting run at: `date`"
# ---------------------------------------------------------------------
# Run your simulation step here...

<!--T:100-->
if test -e state.cpt; then
     # There is a checkpoint file, restart;
     mdrun --restart state.cpt
else
     # There is no checkpoint file, start a new simulation.
     mdrun
fi
status=$?   # Save the exit code of the simulation step for the final message.

<!--T:101-->
# Resubmit if not all work has been done yet.
# You must define the function work_should_continue().
if work_should_continue; then
     sbatch ${BASH_SOURCE[0]}
fi

<!--T:102-->
# ---------------------------------------------------------------------
echo "Job finished with exit code $status at: `date`"
# ---------------------------------------------------------------------
}}

<!--T:143-->
<b>Please note:</b> The test to determine whether to submit a follow-up job, abbreviated as <code>work_should_continue</code> in the above example, should be a <i>positive test</i>. There may be a temptation to test for a stopping condition (e.g. is some convergence criterion met?) and submit a new job if the condition is <i>not</i> detected. But if some error arises that you didn't foresee, the stopping condition might never be met and your chain of jobs may continue indefinitely, doing nothing useful.
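One possible shape for such a positive test is sketched below. It rests on an assumption that will not hold for every application: that the program writes the number of completed steps to a small text file at each checkpoint. The file name <code>progress.txt</code>, the variables <code>TOTAL_STEPS</code> and <code>PROGRESS_FILE</code>, and the helper <code>read_steps</code> are all hypothetical. The test succeeds only if the current job demonstrably advanced the computation and work remains, so a job that fails without making progress ends the chain.

```shell
#!/bin/bash
# Sketch of a positive test for resubmission. Assumption: the application
# writes the number of completed steps to PROGRESS_FILE at each checkpoint.
TOTAL_STEPS=${TOTAL_STEPS:-1000000}
PROGRESS_FILE=${PROGRESS_FILE:-progress.txt}

# Print the recorded step count, or 0 if the file is missing or malformed.
read_steps() {
    local n=""
    [[ -r $PROGRESS_FILE ]] && n=$(head -n 1 "$PROGRESS_FILE")
    [[ $n =~ ^[0-9]+$ ]] && echo "$n" || echo 0
}

# Record the step count before the simulation step of this job runs.
steps_at_start=$(read_steps)

work_should_continue() {
    local now
    now=$(read_steps)
    # Continue only if this job made progress AND the target is not reached.
    (( 10#$now > 10#$steps_at_start && 10#$now < TOTAL_STEPS ))
}
```

In a job script, the <code>steps_at_start</code> assignment would go before the simulation step and the call to <code>work_should_continue</code> after it.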

== Automating job submission == <!--T:174-->
As described earlier, [[#Array job|array jobs]] can be used to automate job submission. We provide a few other (more advanced) tools designed to facilitate running a large number of related serial, parallel, or GPU calculations. This practice is sometimes called <i>farming</i>, <i>serial farming</i>, or <i>task farming</i>. In addition to automating the workflow, these tools can also improve computational efficiency by bundling up many short computations into fewer tasks of longer duration.

<!--T:175-->
The following tools are available on our clusters:
* [[META-Farm]]
* [[GNU Parallel]]
* [[GLOST]]

=== Do not specify a partition === <!--T:177-->

<!--T:178-->
Certain software packages such as [https://github.com/alekseyzimin/masurca Masurca] operate by submitting jobs to Slurm automatically, and expect a partition to be specified for each job. This is in conflict with what we recommend, which is that you should allow the scheduler to assign a partition to your job based on the resources it requests. If you are using such a piece of software, you may configure the software to use <code>--partition=default</code>, which the script treats the same as not specifying a partition.

== Cluster particularities == <!--T:154-->

<!--T:155-->
Job scheduling policies differ somewhat from one of our clusters to another. The differences are summarized by tab below:

<!--T:156-->
<tabs>
<tab name="Beluga">
On Beluga, no jobs are permitted longer than 168 hours (7 days) and there is a limit of 1000 jobs, queued and running, per user. Production jobs should have a duration of at least an hour.
</tab>
<tab name="Cedar">
On Cedar, the maximum duration for a job is 28 days. Jobs may not be submitted from directories on the /home filesystem; this reduces the load on that filesystem and improves the responsiveness for interactive work. If the command <code>readlink -f $(pwd) | cut -d/ -f2</code> returns <code>home</code>, you are not permitted to submit jobs from that directory. Transfer the files from that directory to a /project or /scratch directory and submit the job from there.
</tab>
<!--T:179-->
<tab name="Graham">
On Graham, no jobs are permitted longer than 168 hours (7 days) and there is a limit of 1000 jobs, queued and running, per user. Production jobs should have a duration of at least an hour.
</tab>
<!--T:180-->
<tab name="Narval">
On Narval, no jobs are permitted longer than 168 hours (7 days) and there is a limit of 1000 jobs, queued and running, per user. Production jobs should have a duration of at least an hour.
</tab>
<!--T:181-->
<tab name="Niagara">
<ul>
<li><p>Scheduling is by node, so in multiples of 40 cores.</p></li>
<li><p>Your job's maximum walltime is 24 hours.</p></li>
<li><p>Jobs must write to your scratch or project directory (home is read-only on compute nodes).</p></li>
<li><p>Compute nodes have no internet access.</p>
<p>[[Data_Management_at_Niagara#Moving_data | Move your data]] to Niagara before you submit your job.</p></li></ul>
</tab>
</tabs>

== Troubleshooting == <!--T:42-->

==== Avoid hidden characters in job scripts ==== <!--T:43-->
Preparing a job script with a word processor instead of a text editor is a common cause of trouble. Best practice is to prepare your job script on the cluster using an editor such as nano, vim, or emacs. If you prefer to prepare or alter the script off-line, then:
* '''Windows users:'''
** Use a text editor such as Notepad or [https://notepad-plus-plus.org/ Notepad++].
** After uploading the script, use <code>dos2unix</code> to change Windows end-of-line characters to Linux end-of-line characters.
* '''Mac users:'''
** Open a terminal window and use an editor such as nano, vim, or emacs.

==== Cancellation of jobs with dependency conditions which cannot be met ==== <!--T:109-->
A job submitted with <code>--dependency=afterok:<jobid></code> is a <i>dependent job</i>. A dependent job will wait for the parent job to be completed. If the parent job fails (that is, ends with a non-zero exit code) the dependent job can never be scheduled and so will be automatically cancelled. See [https://slurm.schedmd.com/sbatch.html#OPT_dependency sbatch] for more on dependency.

==== Job cannot load a module ==== <!--T:116-->
It is possible to see an error such as:

<!--T:117-->
 Lmod has detected the following error: These module(s) exist but cannot be
 loaded as requested: "<module-name>/<version>"
    Try: "module spider <module-name>/<version>" to see how to load the module(s).

<!--T:118-->
This can occur if the particular module has an unsatisfied prerequisite. For example:

<!--T:119-->
<source lang="console">
$ module load gcc
$ module load quantumespresso/6.1
Lmod has detected the following error: These module(s) exist but cannot be loaded as requested: "quantumespresso/6.1"
   Try: "module spider quantumespresso/6.1" to see how to load the module(s).
$ module spider quantumespresso/6.1

<!--T:120-->
-----------------------------------------
  quantumespresso: quantumespresso/6.1
------------------------------------------
  Description:
    Quantum ESPRESSO is an integrated suite of computer codes for electronic-structure calculations and materials modeling at the nanoscale. It is based on density-functional theory, plane waves, and pseudopotentials (both
    norm-conserving and ultrasoft).

<!--T:121-->
  Properties:
    Chemistry libraries/apps / Logiciels de chimie

<!--T:122-->
  You will need to load all module(s) on any one of the lines below before the "quantumespresso/6.1" module is available to load.

<!--T:123-->
    nixpkgs/16.09  intel/2016.4  openmpi/2.1.1

<!--T:124-->
  Help:

<!--T:125-->
    Description
    ===========
    Quantum ESPRESSO is an integrated suite of computer codes
    for electronic-structure calculations and materials modeling at the nanoscale.
    It is based on density-functional theory, plane waves, and pseudopotentials
    (both norm-conserving and ultrasoft).

<!--T:126-->
    More information
    ================
    - Homepage: http://www.pwscf.org/
</source>

<!--T:127-->
In this case, adding the line <code>module load nixpkgs/16.09 intel/2016.4 openmpi/2.1.1</code> to your job script before loading quantumespresso/6.1 will solve the problem.

==== Jobs inherit environment variables ==== <!--T:128-->
By default, a job inherits the environment variables of the shell from which it was submitted. The [[Using modules|module]] command, which is used to make various software packages available, changes and sets environment variables. These changes propagate to any job submitted from the shell and can therefore affect the job's ability to load modules if prerequisites are missing. It is best to include the line <code>module purge</code> in your job script before loading all the required modules; this ensures a consistent state for each job submission and prevents changes made in your shell from affecting your jobs.
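
As a minimal sketch of this advice, the function below purges the inherited modules and then loads the prerequisites before the module that needs them. The function name <code>load_job_modules</code> is made up for illustration, and the module versions are simply those from the quantumespresso example above; substitute the ones that <code>module spider</code> reports for your software.

```shell
#!/bin/bash
# Start from a clean module environment, then load the prerequisites
# before the module that depends on them.
# load_job_modules is a hypothetical name; versions come from the example above.
load_job_modules() {
    module purge
    module load nixpkgs/16.09 intel/2016.4 openmpi/2.1.1
    module load quantumespresso/6.1
}

# In a job script, call load_job_modules before running the application.
```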

<!--T:152-->
Inheriting environment settings from the submitting shell can sometimes lead to hard-to-diagnose problems. If you wish to suppress this inheritance, use the <code>--export=none</code> directive when submitting jobs.

==== Job hangs / no output / incomplete output ==== <!--T:165-->

<!--T:166-->
Sometimes a submitted job writes no output to the log file for an extended period of time, which makes it look as if the job is hanging. A common reason for this is the aggressive [[#Output_buffering|buffering]] performed by the Slurm scheduler, which aggregates many output lines before flushing them to the log file. Often the output file is only written after the job completes, and if the job is cancelled (or runs out of time), part of the output may be lost. If you wish to monitor the progress of your submitted job as it runs, consider running an [[#Interactive_jobs|interactive job]]. This is also a good way to find out how much time your job needs.

== Job status and priority == <!--T:103-->
* For a discussion of how job priority is determined and how things like time limits may affect the scheduling of your jobs at Cedar and Graham, see [[Job scheduling policies]].
* If jobs ''within your research group'' are competing with one another, please see [[Managing_Slurm_accounts|Managing Slurm accounts]].

== Further reading == <!--T:44-->
* Comprehensive [https://slurm.schedmd.com/documentation.html documentation] is maintained by SchedMD, as well as some [https://slurm.schedmd.com/tutorials.html tutorials].
** [https://slurm.schedmd.com/sbatch.html sbatch] command options
* There is also a [https://slurm.schedmd.com/rosetta.pdf "Rosetta stone"] mapping commands and directives from PBS/Torque, SGE, LSF, and LoadLeveler, to SLURM.
* Here is a text tutorial from [http://www.ceci-hpc.be/slurm_tutorial.html CÉCI], Belgium
* Here is a rather minimal text tutorial from [http://www.brightcomputing.com/blog/bid/174099/slurm-101-basic-slurm-usage-for-linux-clusters Bright Computing]

<!--T:48-->
[[Category:SLURM]]
</translate>

Latest revision as of 14:19, 18 October 2024

This page is intended for the user who is already familiar with the concepts of job scheduling and job scripts, and who wants guidance on submitting jobs to our clusters. If you have not worked on a large shared computer cluster before, you should probably read What is a scheduler? first.

All jobs must be submitted via the scheduler!

On our clusters, the job scheduler is the Slurm Workload Manager. Comprehensive documentation for Slurm is maintained by SchedMD. If you are coming to Slurm from PBS/Torque, SGE, LSF, or LoadLeveler, you might find this table of corresponding commands useful.

Use sbatch to submit jobs

The command to submit a job is sbatch:

$ sbatch simple_job.sh

Submitted batch job 123456

A minimal Slurm job script looks like this:

#!/bin/bash

#SBATCH --time=00:15:00

#SBATCH --account=def-someuser

echo 'Hello, world!'

sleep 30

On general-purpose (GP) clusters, this job reserves 1 core and 256MB of memory for 15 minutes. On Niagara, this job reserves the whole node with all its memory.

Directives (or options) in the job script are prefixed with #SBATCH and must precede all executable commands. All available directives are described on the sbatch page. Our policies require that you supply at least a time limit (--time) for each job. You may also need to supply an account name (--account). See Accounts and projects below.

You can also specify directives as command-line arguments to sbatch. So for example,

$ sbatch --time=00:30:00 simple_job.sh

will submit the above job script with a time limit of 30 minutes. The acceptable time formats include "minutes", "minutes:seconds", "hours:minutes:seconds", "days-hours", "days-hours:minutes" and "days-hours:minutes:seconds". Please note that the time limit will strongly affect how quickly the job is started, since longer jobs are eligible to run on fewer nodes.
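
To make the accepted time formats concrete, here is a small sketch that converts a --time value to seconds. The helper slurm_time_to_seconds is hypothetical (it is not part of Slurm) and assumes well-formed input; it only illustrates how each of the formats listed above is read.

```shell
#!/bin/bash
# Hypothetical helper (not part of Slurm): convert a --time value to seconds.
slurm_time_to_seconds() {
    local spec=$1 days=0 hours=0 minutes=0 seconds=0
    local a b c
    if [[ $spec == *-* ]]; then
        # days-hours, days-hours:minutes or days-hours:minutes:seconds
        days=${spec%%-*}
        IFS=: read -r hours minutes seconds <<< "${spec#*-}"
    else
        IFS=: read -r a b c <<< "$spec"
        if [[ -n $c ]]; then
            hours=$a minutes=$b seconds=$c      # hours:minutes:seconds
        elif [[ -n $b ]]; then
            minutes=$a seconds=$b               # minutes:seconds
        else
            minutes=$a                          # minutes
        fi
    fi
    # 10# forces base 10 so that values such as "08" are not read as octal.
    echo $(( 10#${days:-0} * 86400 + 10#${hours:-0} * 3600 + 10#${minutes:-0} * 60 + 10#${seconds:-0} ))
}

slurm_time_to_seconds 00:15:00   # prints 900 (15 minutes, as in simple_job.sh)
```

Note that a bare number such as 30 means 30 minutes, while 1-12 means one day and twelve hours.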

Please be cautious if you use a script to submit multiple Slurm jobs in a short time. Submitting thousands of jobs at a time can cause Slurm to become unresponsive to other users. Consider using an array job instead, or use sleep to space out calls to sbatch by one second or more.
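
If an array job does not fit your workflow, a submission loop can at least be spaced out as suggested above. The following is only a sketch: the function name submit_spaced, the script name job.sh and the 15-minute time limit are placeholders chosen for illustration.

```shell
#!/bin/bash
# Submit one job per input file, pausing between calls to sbatch.
# submit_spaced, job.sh and the time limit are placeholders for illustration.
submit_spaced() {
    local delay=$1 input
    shift
    for input in "$@"; do
        # Arguments after the script name are passed on to the job script.
        sbatch --time=00:15:00 job.sh "$input"
        sleep "$delay"
    done
}

# Usage (on a cluster): submit_spaced 1 inputs/*.dat
```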

Memory

Memory may be requested with --mem-per-cpu (memory per core) or --mem (memory per node). On general-purpose (GP) clusters, a default memory amount of 256 MB per core will be allocated unless you make some other request. On Niagara, only whole nodes are allocated along with all available memory, so a memory specification is not required there.

A common source of confusion comes from the fact that some memory on a node is not available to the job (reserved for the OS, etc.). The effect of this is that each node type has a maximum amount available to jobs; for instance, nominally "128G" nodes are typically configured to permit 125G of memory to user jobs. If you request more memory than a node-type provides, your job will be constrained to run on higher-memory nodes, which may be fewer in number.

Adding to this confusion, Slurm interprets K, M, G, etc., as binary prefixes, so --mem=125G is equivalent to --mem=128000M. See the Available memory column in the Node characteristics table for each GP cluster for the Slurm specification of the maximum memory you can request on each node: Béluga, Cedar, Graham, Narval.
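
The arithmetic behind that equivalence is simply a factor of 1024 per prefix step. A one-line sketch (the function name gib_to_mib is made up for this illustration):

```shell
#!/bin/bash
# Slurm reads G as 1024 M, so a request in G converts to M like this.
# gib_to_mib is a hypothetical name used only for this illustration.
gib_to_mib() {
    echo $(( $1 * 1024 ))
}

gib_to_mib 125   # prints 128000, i.e. --mem=125G equals --mem=128000M
```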

Use squeue or sq to list jobs

The general command for checking the status of Slurm jobs is squeue, but by default it supplies information about all jobs in the system, not just your own. You can use the shorter sq to list only your own jobs:

$ sq

JOBID USER ACCOUNT NAME ST TIME_LEFT NODES CPUS GRES MIN_MEM NODELIST (REASON)

123456 smithj def-smithj simple_j R 0:03 1 1 (null) 4G cdr234 (None)

123457 smithj def-smithj bigger_j PD 2-00:00:00 1 16 (null) 16G (Priority)

The ST column of the output shows the status of each job. The two most common states are PD for pending or R for running.

If you want to know more about the output of sq or squeue, or learn how to change the output, see the online manual page for squeue. sq is a local customization.

Do not run sq or squeue from a script or program at high frequency (e.g. every few seconds). Responding to squeue adds load to Slurm, and may interfere with its performance or correct operation. See Email notification below for a much better way to learn when your job starts or ends.

Where does the output go?

By default the output is placed in a file named "slurm-", suffixed with the job ID number and ".out" (e.g. slurm-123456.out), in the directory from which the job was submitted.

Having the job ID as part of the file name is convenient for troubleshooting.

A different name or location can be specified if your workflow requires it by using the --output directive.

Certain replacement symbols can be used in a filename specified this way, such as the job ID number, the job name, or the job array task ID.

See the vendor documentation on sbatch for a complete list of replacement symbols and some examples of their use.

Error output will normally appear in the same file as standard output, just as it would if you were typing commands interactively. If you want to send the standard error channel (stderr) to a separate file, use --error.

Accounts and projects

Every job must have an associated account name corresponding to a Resource Allocation Project (RAP). If you are a member of only one account, the scheduler will automatically associate your jobs with that account.

If you receive one of the following messages when you submit a job, then you have access to more than one account:

You are associated with multiple _cpu allocations... Please specify one of the following accounts to submit this job:

You are associated with multiple _gpu allocations... Please specify one of the following accounts to submit this job:

In this case, use the --account directive to specify one of the accounts listed in the error message, e.g.:

#SBATCH --account=def-user-ab

To find out which account name corresponds to a given Resource Allocation Project, log in to CCDB and click on My Account -> My Resources and Allocations. You will see a list of all the projects you are a member of. The string you should use with the --account option for a given project is under the column Group Name. Note that a Resource Allocation Project may only apply to a specific cluster (or set of clusters) and therefore may not be transferable from one cluster to another.

For example, jobs submitted with --account=def-fuenma will be accounted against RAP zhf-914-aa.

If you plan to use one account consistently for all jobs, once you have determined the right account name you may find it convenient to set the following three environment variables in your ~/.bashrc file:

export SLURM_ACCOUNT=def-someuser
export SBATCH_ACCOUNT=$SLURM_ACCOUNT
export SALLOC_ACCOUNT=$SLURM_ACCOUNT

Slurm will use the value of SBATCH_ACCOUNT in place of the --account directive in the job script. Note that even if you supply an account name inside the job script, the environment variable takes priority. In order to override the environment variable, you must supply an account name as a command-line argument to sbatch.

SLURM_ACCOUNT plays the same role as SBATCH_ACCOUNT, but for the srun command instead of sbatch. The same idea holds for SALLOC_ACCOUNT.

Examples of job scripts

Serial job

A serial job is a job which only requests a single core. It is the simplest type of job. The "simple_job.sh" which appears above in Use sbatch to submit jobs is an example.

Array job

Also known as a task array, an array job is a way to submit a whole set of jobs with one command. The individual jobs in the array are distinguished by an environment variable, $SLURM_ARRAY_TASK_ID, which is set to a different value for each instance of the job. The following example will create 10 tasks, with values of $SLURM_ARRAY_TASK_ID ranging from 1 to 10:

#!/bin/bash

#SBATCH --account=def-someuser

#SBATCH --time=0-0:5

#SBATCH --array=1-10

./myapplication $SLURM_ARRAY_TASK_ID

For more examples, see Job arrays. See Job Array Support for detailed documentation.
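
A common pattern is to use the task ID to pick one line from a list of inputs. The sketch below assumes a plain-text file with one input name per line; the function name input_for_task and the file name inputs.txt are placeholders, not something provided on our clusters.

```shell
#!/bin/bash
# Print the line of a list file that corresponds to a given array task ID.
# input_for_task and inputs.txt are placeholder names for illustration.
input_for_task() {
    local list_file=$1 task_id=$2
    sed -n "${task_id}p" "$list_file"
}

# In a job script with "#SBATCH --array=1-10" one could then write:
#   ./myapplication "$(input_for_task inputs.txt "$SLURM_ARRAY_TASK_ID")"
```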

Threaded or OpenMP job

This example script launches a single process with eight CPU cores. Bear in mind that for an application to use OpenMP it must be compiled with the appropriate flag, e.g. gcc -fopenmp ... or icc -qopenmp ...

#!/bin/bash

#SBATCH --account=def-someuser

#SBATCH --time=0-0:5

#SBATCH --cpus-per-task=8

export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK

./ompHello

MPI job

This example script launches four MPI processes, each with 1024 MB of memory. The run time is limited to 5 minutes.

#!/bin/bash

#SBATCH --account=def-someuser

#SBATCH --ntasks=4 # number of MPI processes

#SBATCH --mem-per-cpu=1024M # memory; default unit is megabytes

#SBATCH --time=0-00:05 # time (DD-HH:MM)

srun ./mpi_program # mpirun or mpiexec also work

Large MPI jobs, specifically those which can efficiently use whole nodes, should use --nodes and --ntasks-per-node instead of --ntasks. Hybrid MPI/threaded jobs are also possible. For more on these and other options relating to distributed parallel jobs, see Advanced MPI scheduling.

For more on writing and running parallel programs with OpenMP, see OpenMP.

GPU job

There are many options involved in requesting GPUs because

- the GPU-equipped nodes at Cedar and Graham have different configurations,

- there are two different configurations at Cedar, and

- there are different policies for the different Cedar GPU nodes.

Please see Using GPUs with Slurm for a discussion and examples of how to schedule various job types on the available GPU resources.

Interactive jobs

Though batch submission is the most common and most efficient way to take advantage of our clusters, interactive jobs are also supported. These can be useful for things like:

- Data exploration at the command line

- Interactive console tools like R and iPython

- Significant software development, debugging, or compiling

You can start an interactive session on a compute node with salloc. In the following example we request one task, which corresponds to one CPU core, and 3 GB of memory, for an hour:

$ salloc --time=1:0:0 --mem-per-cpu=3G --ntasks=1 --account=def-someuser
salloc: Granted job allocation 1234567
$ ...             # do some work
$ exit            # terminate the allocation
salloc: Relinquishing job allocation 1234567

It is also possible to run graphical programs interactively on a compute node by adding the --x11 flag to your salloc command. In order for this to work, you must first connect to the cluster with X11 forwarding enabled (see the SSH page for instructions on how to do that). Note that an interactive job with a duration of three hours or less will likely start very soon after submission as we have dedicated test nodes for jobs of this duration. Interactive jobs that request more than three hours run on the cluster's regular set of nodes and may wait for many hours or even days before starting, at an unpredictable (and possibly inconvenient) hour.

Monitoring jobs

Current jobs

By default squeue will show all the jobs the scheduler is managing at the moment. It will run much faster if you ask only about your own jobs with

$ squeue -u $USER

You can also use the utility sq to do the same thing with less typing.

You can show only running jobs, or only pending jobs:

$ squeue -u <username> -t RUNNING
$ squeue -u <username> -t PENDING

You can show detailed information for a specific job with scontrol:

$ scontrol show job -dd <jobid>

Do not run squeue from a script or program at high frequency (e.g., every few seconds). Responding to squeue adds load to Slurm and may interfere with its performance or correct operation.

Email notification

You can ask to be notified by email of certain job conditions by supplying options to sbatch:

#SBATCH --mail-user=your.email@example.com
#SBATCH --mail-type=ALL

Please do not turn on these options unless you are going to read the emails they generate! We occasionally have email service providers (Google, Yahoo, etc) restrict the flow of mail from our domains because one user is generating a huge volume of unnecessary emails via these options.

For a complete list of the options for --mail-type see SchedMD's documentation.

Output buffering

Output from a non-interactive Slurm job is normally buffered, which means that there is usually a delay between when data is written by the job and when you can see the output on a login node. Depending on the application you are running and the load on the filesystem, this delay can range from less than a second to many minutes, or until the job completes.

There are methods to reduce or eliminate the buffering, but we do not recommend using them because buffering is vital to preserving the overall performance of the filesystem. If you need to monitor the output from a job in real time, we recommend you run an interactive job as described above.

Completed jobs

Get a short summary of the CPU and memory efficiency of a job with seff:

$ seff 12345678
Job ID: 12345678
Cluster: cedar
User/Group: jsmith/jsmith
State: COMPLETED (exit code 0)
Cores: 1
CPU Utilized: 02:48:58
CPU Efficiency: 99.72% of 02:49:26 core-walltime
Job Wall-clock time: 02:49:26
Memory Utilized: 213.85 MB
Memory Efficiency: 0.17% of 125.00 GB

Find more detailed information about a completed job with sacct, and optionally, control what it prints using --format:

$ sacct -j <jobid>
$ sacct -j <jobid> --format=JobID,JobName,MaxRSS,Elapsed

The output from sacct typically includes records labelled .bat+ and .ext+, and possibly .0, .1, .2, ....

The batch step (.bat+) is your submission script - for many jobs that's where the main part of the work is done and where the resources are consumed.

If you use srun in your submission script, that would create a .0 step that would consume most of the resources.

The extern (.ext+) step is basically prologue and epilogue and normally doesn't consume any significant resources.

If a node fails while running a job, the job may be restarted. sacct will normally show you only the record for the last (presumably successful) run. If you wish to see all records related to a given job, add the --duplicates option.

Use the MaxRSS accounting field to determine how much memory a job needed. The value returned will be the largest resident set size for any of the tasks. If you want to know which task and node this occurred on, print the MaxRSSTask and MaxRSSNode fields also.

The sstat command works on a running job much the same way that sacct works on a completed job.

Attaching to a running job

It is possible to connect to the node running a job and execute new processes there. You might want to do this for troubleshooting or to monitor the progress of a job.

Suppose you want to run the utility nvidia-smi to monitor GPU usage on a node where you have a job running. The following command runs watch on the node assigned to the given job, which in turn runs nvidia-smi every 30 seconds, displaying the output on your terminal.

$ srun --jobid 123456 --pty watch -n 30 nvidia-smi

It is possible to launch multiple monitoring commands using tmux. The following command launches htop and nvidia-smi in separate panes to monitor the activity on a node assigned to the given job.

$ srun --jobid 123456 --pty tmux new-session -d 'htop -u $USER' \; split-window -h 'watch nvidia-smi' \; attach

Processes launched with srun share the resources with the job specified. You should therefore be careful not to launch processes that would use a significant portion of the resources allocated for the job. Using too much memory, for example, might result in the job being killed; using too many CPU cycles will slow down the job.

Note: The srun commands shown above work only to monitor a job submitted with sbatch. To monitor an interactive job, create multiple panes with tmux and start each process in its own pane.

Cancelling jobs

Use scancel with the job ID to cancel a job:

$ scancel <jobid>

You can also use it to cancel all your jobs, or all your pending jobs:

$ scancel -u $USER
$ scancel -t PENDING -u $USER

Resubmitting jobs for long-running computations

When a computation is going to require a long time to complete, so long that it cannot be done within the time limits on the system, the application you are running must support checkpointing. The application should be able to save its state to a file, called a checkpoint file, and then it should be able to restart and continue the computation from that saved state.

For many users restarting a calculation will be rare and may be done manually, but some workflows require frequent restarts. In this case some kind of automation technique may be employed.

Here are two recommended methods of automatic restarting:

- Using SLURM job arrays.

- Resubmitting from the end of the job script.

Our Machine Learning tutorial covers resubmitting for long machine learning jobs.

Restarting using job arrays

Using the --array=1-100%1 syntax one can submit a collection of identical jobs with the condition that only one of them will run at any given time; the number after % is the maximum number of array tasks allowed to run simultaneously.

The script should be written to ensure that the last checkpoint is always used for the next job. The number of restarts is fixed by the --array argument.

Consider, for example, a molecular dynamics simulation that has to be run for 1 000 000 steps but does not fit into the time limit on the cluster. We can split the simulation into 10 smaller jobs of 100 000 steps, run one after another.
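
The number of array tasks follows from dividing the total number of steps by the steps per job and rounding up. As a rough sketch (the function name array_spec is invented here), the matching --array value with a %1 limit, meaning one task running at a time, can be computed like this:

```shell
#!/bin/bash
# Build an --array value that runs ceil(total/per_job) tasks, one at a time.
# array_spec is a hypothetical name used only for this illustration.
array_spec() {
    local total_steps=$1 steps_per_job=$2
    # Integer ceiling division.
    local njobs=$(( (total_steps + steps_per_job - 1) / steps_per_job ))
    echo "1-${njobs}%1"
}

array_spec 1000000 100000   # prints 1-10%1
```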

An example of using a job array to restart a simulation:

#!/bin/bash

# ---------------------------------------------------------------------

# SLURM script for a multi-step job on our clusters.

# ---------------------------------------------------------------------

#SBATCH --account=def-someuser

#SBATCH --cpus-per-task=1

#SBATCH --time=0-10:00

#SBATCH --mem=100M

#SBATCH --array=1-10%1 # Run a 10-job array, one job at a time.

# ---------------------------------------------------------------------

echo "Current working directory: `pwd`"

echo "Starting run at: `date`"

# ---------------------------------------------------------------------

echo ""

echo "Job Array ID / Job ID: $SLURM_ARRAY_JOB_ID / $SLURM_JOB_ID"

echo "This is job $SLURM_ARRAY_TASK_ID out of $SLURM_ARRAY_TASK_COUNT jobs."

echo ""

# ---------------------------------------------------------------------

# Run your simulation step here...

if test -e state.cpt; then

# There is a checkpoint file, restart;

mdrun --restart state.cpt

else

# There is no checkpoint file, start a new simulation.

mdrun

fi

# ---------------------------------------------------------------------

echo "Job finished with exit code $? at: `date`"

# ---------------------------------------------------------------------

Resubmission from the job script

In this case one submits a job that runs the first chunk of the calculation and saves a checkpoint. Once the chunk is done but before the allocated run-time of the job has elapsed, the script checks if the end of the calculation has been reached. If the calculation is not yet finished, the script submits a copy of itself to continue working.

An example of a job script with resubmission:

#!/bin/bash

# ---------------------------------------------------------------------

# SLURM script for job resubmission on our clusters.

# ---------------------------------------------------------------------

#SBATCH --job-name=job_chain

#SBATCH --account=def-someuser

#SBATCH --cpus-per-task=1

#SBATCH --time=0-10:00

#SBATCH --mem=100M

# ---------------------------------------------------------------------

echo "Current working directory: `pwd`"

echo "Starting run at: `date`"

# ---------------------------------------------------------------------

# Run your simulation step here...

if test -e state.cpt; then
    # There is a checkpoint file, restart;
    mdrun --restart state.cpt
else
    # There is no checkpoint file, start a new simulation.
    mdrun
fi
# Save the exit status of whichever mdrun command ran, before later
# commands overwrite $?.
exit_code=$?

# Resubmit if not all work has been done yet.
# You must define the function work_should_continue().
if work_should_continue; then
    sbatch "${BASH_SOURCE[0]}"
fi

# ---------------------------------------------------------------------
echo "Job finished with exit code $exit_code at: `date`"

# ---------------------------------------------------------------------

Please note: The test to determine whether to submit a follow-up job, abbreviated as work_should_continue in the above example, should be a positive test. There may be a temptation to test for a stopping condition (e.g. is some convergence criterion met?) and submit a new job if the condition is not detected. But if some error arises that you didn't foresee, the stopping condition might never be met and your chain of jobs may continue indefinitely, doing nothing useful.
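
One possible shape for such a positive test is sketched below. It is an illustration only and rests on assumptions not made elsewhere on this page: that your application records the number of completed steps as a plain integer in a file (called steps_done.txt here), and that the total amount of work is known (TARGET_STEPS). The job is resubmitted only when there is evidence that progress was made and that work remains.

```shell
#!/bin/bash
# Hypothetical positive test: resubmit only when there is evidence of
# healthy progress. Assumes the application writes the number of completed
# steps, as a plain integer, to a file after each checkpoint.
TARGET_STEPS=1000000

work_should_continue() {
    local progress_file=${1:-steps_done.txt} steps_at_start=${2:-0}
    local steps
    [[ -r $progress_file ]] || return 1                 # no progress record: stop
    steps=$(head -n 1 "$progress_file")
    [[ $steps =~ ^[0-9]+$ ]] || return 1                # unreadable record: stop
    (( 10#$steps > 10#$steps_at_start )) || return 1    # no progress made: stop
    (( 10#$steps < TARGET_STEPS ))                      # continue only if work remains
}
```

In a job script you would record the step count before running the simulation and pass it as the second argument, so that a job which crashes immediately does not resubmit itself.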

Automating job submission

As described earlier, array jobs can be used to automate job submission. We provide a few other (more advanced) tools designed to facilitate running a large number of related serial, parallel, or GPU calculations. This practice is sometimes called farming, serial farming, or task farming. In addition to automating the workflow, these tools can also improve computational efficiency by bundling up many short computations into fewer tasks of longer duration.

The following tools are available on our clusters:

- META-Farm
- GNU Parallel
- GLOST

Do not specify a partition

Certain software packages such as Masurca operate by submitting jobs to Slurm automatically, and expect a partition to be specified for each job. This is in conflict with what we recommend, which is that you should allow the scheduler to assign a partition to your job based on the resources it requests. If you are using such a piece of software, you may configure the software to use --partition=default, which the script treats the same as not specifying a partition.

Cluster particularities

There are certain differences in the job scheduling policies from one of our clusters to another and these are summarized by tab in the following section:

On Beluga, no jobs are permitted longer than 168 hours (7 days) and there is a limit of 1000 jobs, queued and running, per user. Production jobs should have a duration of at least an hour.

On Cedar, the maximum duration for a job is 28 days. Jobs may not be submitted from directories on the /home filesystem; this restriction reduces the load on that filesystem and improves responsiveness for interactive work. If the command readlink -f $(pwd) | cut -d/ -f2 returns home, you are not permitted to submit jobs from that directory. Transfer the files from that directory to a /project or /scratch directory and submit the job from there.
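The readlink check can be wrapped in a small guard to run before submitting. This is only a sketch: the function name top_level_dir is made up for illustration, and the only logic used is the command quoted above.

```bash
#!/bin/bash
# Print the first path component of a directory after resolving symlinks,
# e.g. "home", "project" or "scratch".
top_level_dir() {
    readlink -f "$1" | cut -d/ -f2
}

if [ "$(top_level_dir "$PWD")" = "home" ]; then
    echo "Do not submit from here: $PWD is on /home" >&2
else
    echo "OK to submit from $PWD"
fi
```

Resolving symlinks first matters because a directory that appears to be elsewhere may in fact be a link into /home.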

On Graham, no jobs are permitted longer than 168 hours (7 days) and there is a limit of 1000 jobs, queued and running, per user. Production jobs should have a duration of at least an hour.

On Narval, no jobs are permitted longer than 168 hours (7 days) and there is a limit of 1000 jobs, queued and running, per user. Production jobs should have a duration of at least an hour.

On Niagara:

- Scheduling is by node, so in multiples of 40 cores.

- Your job's maximum walltime is 24 hours.

- Jobs must write to your scratch or project directory (home is read-only on compute nodes).

- Compute nodes have no internet access.

- Move your data to Niagara before you submit your job.
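Because Niagara schedules whole nodes of 40 cores each, a core count that is not a multiple of 40 is in effect rounded up. The arithmetic can be sketched as follows; the function names are illustrative and not part of any Slurm tool.

```bash
#!/bin/bash
CORES_PER_NODE=40   # Niagara node size, as stated in the text

# Smallest number of whole nodes providing at least the requested cores.
nodes_for_cores() {
    echo $(( ($1 + CORES_PER_NODE - 1) / CORES_PER_NODE ))
}

# Cores actually allocated once the request is rounded up to whole nodes.
allocated_cores() {
    echo $(( $(nodes_for_cores "$1") * CORES_PER_NODE ))
}

echo "100 cores -> $(nodes_for_cores 100) nodes, $(allocated_cores 100) cores allocated"
```

For a 100-core calculation this gives 3 nodes and 120 allocated cores, so it is usually worth sizing the work to use all 40 cores on each node.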

Troubleshooting

Preparing a job script with a word processor instead of a text editor is a common cause of trouble. Best practice is to prepare your job script on the cluster using an editor such as nano, vim, or emacs. If you prefer to prepare or alter the script off-line, then:

- Windows users:

  - Use a text editor such as Notepad or Notepad++.

  - After uploading the script, use dos2unix to change Windows end-of-line characters to Linux end-of-line characters.

- Mac users:

  - Open a terminal window and use an editor such as nano, vim, or emacs.
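To check whether an uploaded script still has Windows line endings, and to convert it, something like the following sketch could be used. The helper names are hypothetical, and the sed fallback is an assumption for systems where dos2unix is not installed (it relies on GNU sed).

```bash
#!/bin/bash
# Succeed if the file contains carriage returns (Windows line endings).
has_crlf() {
    grep -q $'\r' "$1"
}

# Convert CRLF to LF in place; prefer dos2unix, fall back to GNU sed.
to_unix() {
    if command -v dos2unix >/dev/null 2>&1; then
        dos2unix -q "$1"
    else
        sed -i 's/\r$//' "$1"   # strip a trailing carriage return from each line
    fi
}

# Demo on a throwaway script written with Windows line endings.
demo=$(mktemp)
printf '#!/bin/bash\r\necho hello\r\n' > "$demo"
has_crlf "$demo" && echo "CRLF found, converting"
to_unix "$demo"
has_crlf "$demo" || echo "clean"
```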

Cancellation of jobs with dependency conditions which cannot be met

A job submitted with --dependency=afterok:<jobid> is a dependent job. A dependent job will wait for the parent job to be completed. If the parent job fails (that is, ends with a non-zero exit code) the dependent job can never be scheduled and so will be automatically cancelled. See sbatch for more on dependency.
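As an illustration of how a parent and a dependent job are usually chained, the sketch below extracts the job ID from the line sbatch prints ("Submitted batch job 123456") and builds the dependency option from it. The helper names and the script names parent_job.sh and child_job.sh are hypothetical; the final line only prints the command so that the sketch can be tried without a scheduler.

```bash
#!/bin/bash
# Build the option for a job that may start only if the parent succeeds.
afterok_flag() {
    echo "--dependency=afterok:$1"
}

# Extract the job ID from sbatch's normal output,
# which has the form "Submitted batch job 123456".
jobid_from_output() {
    echo "$1" | awk '{print $NF}'
}

# On a cluster the two steps would look like this:
#   out=$(sbatch parent_job.sh)
#   sbatch "$(afterok_flag "$(jobid_from_output "$out")")" child_job.sh
parent=$(jobid_from_output "Submitted batch job 123456")
echo "sbatch $(afterok_flag "$parent") child_job.sh"
```

If parent_job.sh ends with a non-zero exit code, the dependent job submitted this way can never start and is cancelled as described above.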

Job cannot load a module

It is possible to see an error such as:

Lmod has detected the following error: These module(s) exist but cannot be loaded as requested: "<module-name>/<version>" Try: "module spider <module-name>/<version>" to see how to load the module(s).

This can occur if the particular module has an unsatisfied prerequisite. For example:

$ module load gcc

$ module load quantumespresso/6.1

Lmod has detected the following error: These module(s) exist but cannot be loaded as requested: "quantumespresso/6.1"

Try: "module spider quantumespresso/6.1" to see how to load the module(s).

$ module spider quantumespresso/6.1

-----------------------------------------

quantumespresso: quantumespresso/6.1

------------------------------------------

Description:

Quantum ESPRESSO is an integrated suite of computer codes for electronic-structure calculations and materials modeling at the nanoscale. It is based on density-functional theory, plane waves, and pseudopotentials (both

norm-conserving and ultrasoft).

Properties:

Chemistry libraries/apps / Logiciels de chimie

You will need to load all module(s) on any one of the lines below before the "quantumespresso/6.1" module is available to load.

nixpkgs/16.09 intel/2016.4 openmpi/2.1.1

Help:

Description

===========

Quantum ESPRESSO is an integrated suite of computer codes

for electronic-structure calculations and materials modeling at the nanoscale.

It is based on density-functional theory, plane waves, and pseudopotentials

(both norm-conserving and ultrasoft).

More information

================

- Homepage: http://www.pwscf.org/

In this case, adding the line module load nixpkgs/16.09 intel/2016.4 openmpi/2.1.1 to your job script before loading quantumespresso/6.1 solves the problem.

Jobs inherit environment variables

By default a job will inherit the environment variables of the shell where the job was submitted. The module command, which is used to make various software packages available, changes and sets environment variables. Changes will propagate to any job submitted from the shell and thus could affect the job's ability to load modules if there are missing prerequisites. It is best to include the line module purge in your job script before loading all the required modules to ensure a consistent state for each job submission and avoid changes made in your shell affecting your jobs.
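As a sketch of this advice, the snippet below writes a job script that purges the inherited modules and then loads the prerequisites before the package itself, using the quantumespresso/6.1 case discussed above. The file name qe_job.sh is hypothetical, and the time limit and account are the placeholder values used elsewhere on this page.

```bash
#!/bin/bash
# Generate a job script that starts from a clean module environment.
script=qe_job.sh   # hypothetical file name

cat > "$script" <<'EOF'
#!/bin/bash
#SBATCH --time=00:15:00
#SBATCH --account=def-someuser

# Discard whatever modules were loaded in the submitting shell.
module purge
# Load the prerequisites reported by "module spider", then the package.
module load nixpkgs/16.09 intel/2016.4 openmpi/2.1.1
module load quantumespresso/6.1
EOF

echo "wrote $script"
```

Keeping module purge as the first module command means the job behaves the same regardless of what was loaded in the shell it was submitted from.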

Inheriting environment settings from the submitting shell can sometimes lead to hard-to-diagnose problems. If you wish to suppress this inheritance, use the --export=none directive when submitting jobs.

Job hangs / no output / incomplete output

Sometimes a submitted job writes no output to the log file for an extended period of time and appears to be hanging. A common reason for this is the aggressive buffering performed by the Slurm scheduler, which aggregates many output lines before flushing them to the log file. Often the output file is written only after the job completes, and if the job is cancelled (or runs out of time), part of the output may be lost. If you wish to monitor the progress of your submitted job as it runs, consider running an interactive job. This is also a good way to find out how much time your job needs.

Job status and priority

- For a discussion of how job priority is determined and how things like time limits may affect the scheduling of your jobs on Cedar and Graham, see Job scheduling policies.

- If jobs within your research group are competing with one another, please see Managing Slurm accounts.

Further reading

- Comprehensive documentation is maintained by SchedMD, as well as some tutorials.

- sbatch command options

- There is also a "Rosetta stone" mapping commands and directives from PBS/Torque, SGE, LSF, and LoadLeveler to Slurm.

- Here is a text tutorial from CÉCI, Belgium

- Here is a rather minimal text tutorial from Bright Computing