CephFS


Revision as of 22:50, 29 February 2024

CephFS provides a common filesystem that can be shared amongst multiple OpenStack VM hosts. Access to the service is granted via requests to cloud@tech.alliancecan.ca.

This is a fairly technical procedure that assumes basic Linux skills for creating/editing files, setting permissions, and creating mount points. For assistance in setting up this service, write to cloud@tech.alliancecan.ca.

Procedure

If you do not already have a quota for the service, you will need to request this through cloud@tech.alliancecan.ca. In your request, please provide the following:

- OpenStack project name

- amount of quota required in GB

- number of shares required

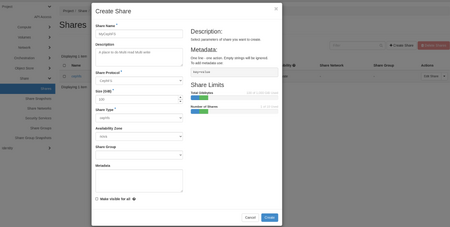

- Create the share.

  - In Project --> Share --> Shares, click on +Create Share.

  - Share Name = enter a name that identifies your project (e.g. project-name-shareName)

  - Share Protocol = CephFS

  - Size = size you need for this share

  - Share Type = cephfs

  - Availability Zone = nova

  - Do not check Make visible for all; otherwise, the share will be accessible by all users in all projects.

  - Click on the Create button.

- Create an access rule to generate an access key.

  - In Project --> Share --> Shares --> Actions column, select Manage Rules from the drop-down menu.

  - Click on the +Add Rule button (right of the page).

  - Access Type = cephx

  - Access Level = select read-write or read-only (you can create multiple rules for either access level if required)

  - Access To = enter a name that describes the key. This name is important: it is used as the client name in the CephFS client configuration on the VM. This page uses MyCephFS-RW.

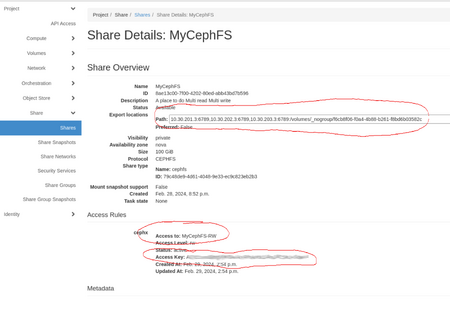

- Note the share details, which you will need later.

  - In Project --> Share --> Shares, click on the name of the share.

  - In the Share Overview, note the three elements circled in red in the "Properly configured" image: the Path, which is used in the mount command on the VM; the Access Rules, which give the client name; and the Access Key, which lets the VM's client connect.
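The share, access-rule and share-details steps above can also be sketched from the command line. This is an illustration under assumptions, not part of the official procedure. It assumes python-manilaclient is installed (it provides the openstack share commands) and that your OpenStack RC file is sourced. The helpers run, create_cephfs_share and show_cephfs_share_details, and the DRY_RUN switch, are hypothetical names introduced here.

```bash
# Sketch only: assumes python-manilaclient (the "openstack share" commands)
# is installed and your OpenStack RC file is sourced.
# With DRY_RUN=1 the commands are printed instead of executed.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

create_cephfs_share() {
    share_name=$1   # e.g. project-name-shareName
    size_gb=$2      # size in GB, within your quota
    client_name=$3  # the "Access To" name, e.g. MyCephFS-RW

    # Same choices as in the web form: protocol CephFS, type cephfs, zone nova
    # (shares are private unless --public is given)
    run openstack share create CephFS "$size_gb" --name "$share_name" \
        --share-type cephfs --availability-zone nova || return 1

    # An access rule can only be added once the share is "available"
    if [ "${DRY_RUN:-0}" != 1 ]; then
        while :; do
            status=$(openstack share show "$share_name" -f value -c status)
            [ "$status" = available ] && break
            [ "$status" = error ] && return 1
            sleep 5
        done
    fi

    # cephx rule; use --access-level ro for a read-only key
    run openstack share access create "$share_name" cephx "$client_name" \
        --access-level rw
}

show_cephfs_share_details() {
    # The access_key column holds the Access Key; the export location is the Path
    run openstack share access list "$1"
    run openstack share export location list "$1"
}
```

For example, run create_cephfs_share project-name-shareName 10 MyCephFS-RW and then show_cephfs_share_details project-name-shareName. These should print the same access key and path that you would otherwise copy from the Share Overview.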

Attach the CephFS network to your VM

On Arbutus

On Arbutus, the CephFS network is already exposed to your VM, so there is nothing to do here: go to the VM configuration section.

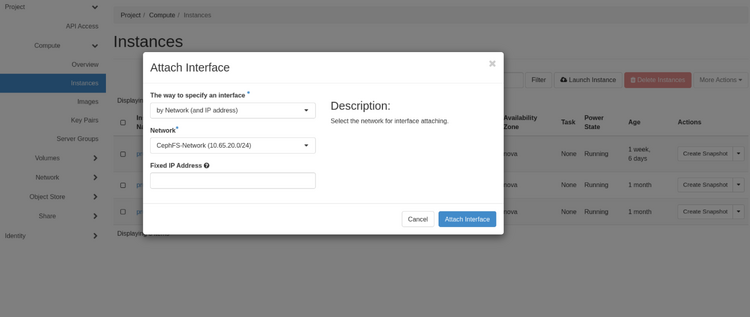

On SD4H/Juno

On SD4H/Juno, you need to explicitly attach the CephFS network to the VM.

- With the web GUI

For each VM you need to attach, select Instance --> Action --> Attach interface, select CephFS-Network, and leave the Fixed IP Address box empty.

- With the OpenStack client

List the servers and note the ID of the server you need to attach to the CephFS network:

```bash
$ openstack server list
+--------------------------------------+--------------+--------+-------------------------------------------+--------------------------+----------+
| ID                                   | Name         | Status | Networks                                  | Image                    | Flavor   |
+--------------------------------------+--------------+--------+-------------------------------------------+--------------------------+----------+
| 1b2a3c21-c1b4-42b8-9016-d96fc8406e04 | prune-dtn1   | ACTIVE | test_network=172.16.1.86, 198.168.189.3   | N/A (booted from volume) | ha4-15gb |
| 0c6df8ea-9d6a-43a9-8f8b-85eb64ca882b | prune-mgmt1  | ACTIVE | test_network=172.16.1.64                  | N/A (booted from volume) | ha4-15gb |
| 2b7ebdfa-ee58-4919-bd12-647a382ec9f6 | prune-login1 | ACTIVE | test_network=172.16.1.111, 198.168.189.82 | N/A (booted from volume) | ha4-15gb |
+--------------------------------------+--------------+--------+-------------------------------------------+--------------------------+----------+
```

Select the ID of the VM you want to attach (here we pick the first one) and run:

```bash
$ openstack server add network 1b2a3c21-c1b4-42b8-9016-d96fc8406e04 CephFS-Network
$ openstack server list
+--------------------------------------+--------------+--------+---------------------------------------------------------------------+--------------------------+----------+
| ID                                   | Name         | Status | Networks                                                            | Image                    | Flavor   |
+--------------------------------------+--------------+--------+---------------------------------------------------------------------+--------------------------+----------+
| 1b2a3c21-c1b4-42b8-9016-d96fc8406e04 | prune-dtn1   | ACTIVE | CephFS-Network=10.65.20.71; test_network=172.16.1.86, 198.168.189.3 | N/A (booted from volume) | ha4-15gb |
| 0c6df8ea-9d6a-43a9-8f8b-85eb64ca882b | prune-mgmt1  | ACTIVE | test_network=172.16.1.64                                            | N/A (booted from volume) | ha4-15gb |
| 2b7ebdfa-ee58-4919-bd12-647a382ec9f6 | prune-login1 | ACTIVE | test_network=172.16.1.111, 198.168.189.82                           | N/A (booted from volume) | ha4-15gb |
+--------------------------------------+--------------+--------+---------------------------------------------------------------------+--------------------------+----------+
```

We can see that the CephFS network is attached to the first VM.
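When several VMs on SD4H/Juno need the CephFS network, the single command above can be wrapped in a loop. This is a minimal sketch that assumes the OpenStack client is already configured. The helpers run and attach_cephfs_network, and the DRY_RUN switch, are hypothetical names.

```bash
# Sketch only (SD4H/Juno): attach CephFS-Network to several VMs in one go.
# Pass the server IDs from "openstack server list" as arguments.
# With DRY_RUN=1 the commands are printed instead of executed.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

attach_cephfs_network() {
    for server in "$@"; do
        run openstack server add network "$server" CephFS-Network || return 1
        # Print the VM's addresses; CephFS-Network should now be listed
        run openstack server show "$server" -f value -c addresses
    done
}
```

For example, to attach the first two VMs from the listing above: attach_cephfs_network 1b2a3c21-c1b4-42b8-9016-d96fc8406e04 0c6df8ea-9d6a-43a9-8f8b-85eb64ca882b.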

VM configuration: install and configure CephFS client

- Install the required packages for the Red Hat family (RHEL, CentOS, Fedora, Rocky, Alma).

Check the available releases at https://download.ceph.com/ and look for recent rpm-* directories; quincy is the right (latest stable) release at the time of this writing. The compatible distributions are listed at https://download.ceph.com/rpm-quincy/. We show the full installation for el8.

Install the relevant repositories to access the Ceph client packages (dnf reads repository definitions from *.repo files in /etc/yum.repos.d/):

```ini
[Ceph]
name=Ceph packages for $basearch
baseurl=http://download.ceph.com/rpm-quincy/el8/$basearch
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc

[Ceph-noarch]
name=Ceph noarch packages
baseurl=http://download.ceph.com/rpm-quincy/el8/noarch
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc

[ceph-source]
name=Ceph source packages
baseurl=http://download.ceph.com/rpm-quincy/el8/SRPMS
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc
```
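Instead of typing the repository definition by hand, you can generate it for a given release and EL version. This sketch assumes download.ceph.com keeps the rpm-<release>/<el>/ layout used above. write_ceph_repo is a hypothetical helper, and the target file name /etc/yum.repos.d/ceph.repo in the usage line is an assumption.

```bash
# Sketch: write the three repository sections shown above.
# Defaults follow this page (quincy, el8); check https://download.ceph.com/
# that rpm-<release>/<el>/ exists before using other values.
write_ceph_repo() {
    release=${1:-quincy}
    el=${2:-el8}
    dest=${3:-ceph.repo}
    base="http://download.ceph.com/rpm-${release}/${el}"
    key="https://download.ceph.com/keys/release.asc"
    # \$basearch is escaped so that dnf, not the shell, expands it
    cat > "$dest" <<EOF
[Ceph]
name=Ceph packages for \$basearch
baseurl=${base}/\$basearch
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=${key}

[Ceph-noarch]
name=Ceph noarch packages
baseurl=${base}/noarch
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=${key}

[ceph-source]
name=Ceph source packages
baseurl=${base}/SRPMS
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=${key}
EOF
}
```

For example: write_ceph_repo quincy el8 /tmp/ceph.repo && sudo install -m 0644 /tmp/ceph.repo /etc/yum.repos.d/ceph.repo.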

The EPEL repository also needs to be in place:

```bash
sudo dnf install epel-release
```

You can now install the Ceph library, the CephFS client and other dependencies:

```bash
sudo dnf install -y libcephfs2 python3-cephfs ceph-common python3-ceph-argparse
```

- Install the required packages for the Debian family (Debian, Ubuntu, Mint, etc.)

Once you have found your distribution {codename} with lsb_release -sc, you can add the repository:

```bash
sudo apt-add-repository 'deb https://download.ceph.com/debian-quincy/ {codename} main'
```
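You can fill in the {codename} placeholder automatically. This is a minimal sketch that assumes lsb_release is installed (as suggested above) and that download.ceph.com provides a debian-<release> repository for your codename. ceph_apt_line is a hypothetical helper.

```bash
# Sketch: print the APT source line with the distribution codename filled in.
# Uses lsb_release -sc when no codename is given; release defaults to quincy.
ceph_apt_line() {
    codename=${1:-$(lsb_release -sc)}
    release=${2:-quincy}
    printf 'deb https://download.ceph.com/debian-%s/ %s main\n' "$release" "$codename"
}

# Usage on the VM:
# sudo apt-add-repository "$(ceph_apt_line)"
```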

Configure the Ceph client

Once the client is installed, you can create a ceph.conf file. Note that the mon host differs between clouds: use the first configuration on Arbutus and the second on SD4H/Juno.

Arbutus:

```ini
[global]
admin socket = /var/run/ceph/$cluster-$name-$pid.asok
client reconnect stale = true
debug client = 0/2
fuse big writes = true
mon host = 10.30.201.3:6789,10.30.202.3:6789,10.30.203.3:6789

[client]
quota = true
```

SD4H/Juno:

```ini
[global]
admin socket = /var/run/ceph/$cluster-$name-$pid.asok
client reconnect stale = true
debug client = 0/2
fuse big writes = true
mon host = 10.65.0.10:6789,10.65.0.12:6789,10.65.0.11:6789

[client]
quota = true
```

You can find the monitor information in the Path field of the share details, which is also used to mount the volume. If the value on the web page differs from what is shown here, this wiki page is out of date.
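The Path field carries both the monitor list and the volume path, so you can split it with plain parameter expansion. This sketch assumes the Path has the form <mon>:<port>,...:/volumes/..., where the first ":/" separates the monitors from the volume path. split_share_path is a hypothetical helper. The example Path is illustrative: it combines the Arbutus monitors with the volume path used on this page.

```bash
# Sketch: split a share Path into the "mon host" value and the volume path.
split_share_path() {
    share_path=$1
    case $share_path in
        *:/*) ;;
        *) echo "unexpected Path format: $share_path" >&2; return 1 ;;
    esac
    mon_host=${share_path%%:/*}     # everything before the first ":/"
    volume_path=/${share_path#*:/}  # everything after it, leading "/" restored
    printf 'mon host = %s\n' "$mon_host"
    printf 'volume path = %s\n' "$volume_path"
}

# Example:
# split_share_path '10.30.201.3:6789,10.30.202.3:6789,10.30.203.3:6789:/volumes/_nogroup/f6cb8f06-f0a4-4b88-b261-f8bd6b03582c'
# prints:
# mon host = 10.30.201.3:6789,10.30.202.3:6789,10.30.203.3:6789
# volume path = /volumes/_nogroup/f6cb8f06-f0a4-4b88-b261-f8bd6b03582c
```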

You also need to put your client name and secret in the ceph.keyring file:

```ini
[client.MyCephFS-RW]
key = <access Key>
```

Again, the access key and client name (here MyCephFS-RW) are found under the access rules on your project web page: in Project --> Share --> Shares, click on the name of the share.
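You can also write both files in one step. This minimal sketch assumes the default /etc/ceph directory, where Ceph tools look for ceph.conf and ceph.keyring unless told otherwise, and assumes it runs as root when writing there. write_ceph_client_files is a hypothetical helper. Its arguments are your cloud's mon host, your client name and your Access Key.

```bash
# Sketch: write ceph.conf and ceph.keyring with the settings shown above.
write_ceph_client_files() {
    mon_host=$1       # mon host for your cloud (Arbutus or SD4H/Juno value)
    client_name=$2    # e.g. MyCephFS-RW
    access_key=$3     # the Access Key from the share's access rules
    dir=${4:-/etc/ceph}
    mkdir -p "$dir" || return 1
    cat > "$dir/ceph.conf" <<EOF
[global]
admin socket = /var/run/ceph/\$cluster-\$name-\$pid.asok
client reconnect stale = true
debug client = 0/2
fuse big writes = true
mon host = ${mon_host}

[client]
quota = true
EOF
    # The keyring holds a secret: make it readable by its owner only (mode 600)
    (umask 077 && printf '[client.%s]\nkey = %s\n' "$client_name" "$access_key" > "$dir/ceph.keyring") &&
        chmod 600 "$dir/ceph.keyring"
}
```

For example, on Arbutus: write_ceph_client_files 10.30.201.3:6789,10.30.202.3:6789,10.30.203.3:6789 MyCephFS-RW '<access Key>'. The keyring is restricted to its owner because it contains the secret.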

- Retrieve the connection information from the share page for your connection

  - Open up the share details by clicking the name of the share in the Shares page.

  - Copy the entire path of the share for mounting the filesystem.

- Mount the filesystem

  - Create a mount point directory somewhere on your host (/cephfs is used here):

```bash
sudo mkdir /cephfs
```

- Via a kernel mount using the ceph driver. You can make the mount permanent by adding the following line to the VM's /etc/fstab:

Arbutus:

```
:/volumes/_nogroup/f6cb8f06-f0a4-4b88-b261-f8bd6b03582c /cephfs/ ceph name=MyCephFS-RW 0 2
```

SD4H/Juno:

```
:/volumes/_nogroup/f6cb8f06-f0a4-4b88-b261-f8bd6b03582c /cephfs/ ceph name=MyCephFS-RW,mds_namespace=cephfs_4_2,x-systemd.device-timeout=30,x-systemd.mount-timeout=30,noatime,_netdev,rw 0 2
```
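Adding the fstab entry by script is handy when several VMs mount the same share. This sketch takes the volume path and client name from the share details. add_cephfs_fstab_entry is a hypothetical helper: it appends the line only if an identical one is not already present. FSTAB is a hypothetical variable that lets you try the helper on a copy instead of /etc/fstab.

```bash
# Sketch: append a CephFS line to fstab unless an identical line is present.
# FSTAB defaults to /etc/fstab; point it at a scratch copy to experiment.
add_cephfs_fstab_entry() {
    volume_path=$1          # volume path from the share's Path field
    client_name=$2          # e.g. MyCephFS-RW
    extra_opts=${3:-}       # empty on Arbutus; mds_namespace=... etc. on SD4H/Juno
    mount_point=${4:-/cephfs/}
    fstab=${FSTAB:-/etc/fstab}
    # ${extra_opts:+,...} adds the comma only when extra options are given
    opts="name=${client_name}${extra_opts:+,$extra_opts}"
    # The leading ":" before the volume path is intentional
    line=":${volume_path} ${mount_point} ceph ${opts} 0 2"
    grep -qxF "$line" "$fstab" 2>/dev/null || printf '%s\n' "$line" >> "$fstab"
}
```

Because the helper checks for an identical line first, re-running it (for example from a provisioning script) does not duplicate the entry.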

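Note:

There is a non-standard/funky ":" before the device path; it is not a typo!

The mount options differ between systems.

The namespace option (mds_namespace) is required for SD4H/Juno. The other options are optional tweaks: for example, noatime skips access-time updates, and _netdev makes the system wait for the network before mounting.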

- It can also be done from the command line:

Arbutus:

```bash
sudo mount -t ceph :/volumes/_nogroup/f6cb8f06-f0a4-4b88-b261-f8bd6b03582c /cephfs/ -o name=MyCephFS-RW
```

SD4H/Juno:

```bash
sudo mount -t ceph :/volumes/_nogroup/f6cb8f06-f0a4-4b88-b261-f8bd6b03582c /cephfs/ -o name=MyCephFS-RW,mds_namespace=cephfs_4_2,x-systemd.device-timeout=30,x-systemd.mount-timeout=30,noatime,_netdev,rw
```

- Or via ceph-fuse, if the file system needs to be mounted in user space (no funky ":" needed here).

Install the ceph-fuse package:

```bash
sudo dnf install ceph-fuse
```

Let the fuse mount be accessible in user space by uncommenting user_allow_other in the fuse.conf file (usually /etc/fuse.conf):

```
# mount_max = 1000
user_allow_other
```
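You can also make this edit non-interactively. This sketch assumes the file lives at /etc/fuse.conf and that GNU sed is available (for sed -i). enable_user_allow_other is a hypothetical helper that leaves the file unchanged if the option is already enabled.

```bash
# Sketch: enable user_allow_other in fuse.conf; safe to run more than once.
enable_user_allow_other() {
    conf=${1:-/etc/fuse.conf}
    if grep -q '^[[:space:]]*user_allow_other' "$conf"; then
        return 0  # already enabled
    elif grep -q '^[[:space:]]*#[[:space:]]*user_allow_other' "$conf"; then
        # Uncomment the existing line (GNU sed in-place edit)
        sed -i 's/^[[:space:]]*#[[:space:]]*user_allow_other/user_allow_other/' "$conf"
    else
        printf 'user_allow_other\n' >> "$conf"
    fi
}

# Usage (as root): enable_user_allow_other /etc/fuse.conf
```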

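You can now mount CephFS in a user’s home:

```bash
mkdir ~/my_cephfs
# $HOME is used rather than ~ because bash does not expand ~ after --conf= or --keyring=
ceph-fuse ~/my_cephfs/ --id=MyCephFS-RW --conf="$HOME/ceph.conf" --keyring="$HOME/ceph.keyring" --client-mountpoint=/volumes/_nogroup/f6cb8f06-f0a4-4b88-b261-f8bd6b03582c
```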

Note that the client name is given here by --id. The contents of ceph.conf and ceph.keyring are exactly the same as for the ceph kernel mount.

Notes

- A particular share can have more than one user key provisioned for it.

  - This allows more granular access to the filesystem, for example, if you need some hosts to access the filesystem only in a read-only capacity.

  - If you have multiple keys for a share, you can add the extra keys to your host and modify the above mounting procedure.

- This service is not available to hosts outside of the OpenStack cluster.
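To illustrate the first note: you can append a second key (for example a read-only one) to the existing keyring and select it at mount time with name=. This is a sketch only. MyCephFS-RO is a hypothetical client name that stands for the Access To value of your extra rule. add_ceph_key is a hypothetical helper. The usage lines assume the keyring is /etc/ceph/ceph.keyring.

```bash
# Sketch: append an extra [client.NAME] section to a keyring, unless present.
add_ceph_key() {
    keyring=$1
    client_name=$2    # hypothetical example: MyCephFS-RO
    access_key=$3
    if grep -qxF "[client.${client_name}]" "$keyring" 2>/dev/null; then
        echo "client.${client_name} is already in ${keyring}" >&2
        return 0
    fi
    # Leading newline guards against a keyring without a trailing newline
    printf '\n[client.%s]\nkey = %s\n' "$client_name" "$access_key" >> "$keyring"
}

# Example: add the read-only key, then mount with that client name
# add_ceph_key /etc/ceph/ceph.keyring MyCephFS-RO '<access Key>'
# sudo mount -t ceph :/volumes/_nogroup/f6cb8f06-f0a4-4b88-b261-f8bd6b03582c /cephfs/ -o name=MyCephFS-RO,ro
```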