ARM software: Difference between revisions

No edit summary |

No edit summary |

||

| (35 intermediate revisions by 7 users not shown) | |||

| Line 1: | Line 1: | ||

<languages /> | <languages /> | ||

[[Category:Software]] | [[Category:Software]] [[Category:Pages with video links]] | ||

<translate> | <translate> | ||

= Introduction = <!--T:1--> | = Introduction = <!--T:1--> | ||

<!--T:2--> | <!--T:2--> | ||

[https://www.arm.com/products/development-tools/hpc-tools/cross-platform/forge/ddt ARM DDT] (formerly know as Allinea DDT) is a powerful commercial parallel debugger with a graphical user interface. It can be used to debug serial, MPI, multi-threaded, and CUDA programs, or any combination of the above, written in C, C++, and FORTRAN. [https://www.arm.com/products/development-tools/hpc-tools/cross-platform/forge/map MAP] | [https://www.arm.com/products/development-tools/hpc-tools/cross-platform/forge/ddt ARM DDT] (formerly know as Allinea DDT) is a powerful commercial parallel debugger with a graphical user interface. It can be used to debug serial, MPI, multi-threaded, and CUDA programs, or any combination of the above, written in C, C++, and FORTRAN. [https://www.arm.com/products/development-tools/hpc-tools/cross-platform/forge/map MAP]—an efficient parallel profiler—is another very useful tool from ARM (formerly Allinea). | ||

<!--T:3--> | <!--T:3--> | ||

The following modules are available on Graham: | |||

* | * ddt-cpu, for CPU debugging and profiling; | ||

* | * ddt-gpu, for GPU or mixed CPU/GPU debugging. | ||

As this is a GUI application, | |||

<!--T:38--> | |||

The following module is available on Niagara: | |||

* ddt | |||

<!--T:39--> | |||

As this is a GUI application, log in using <code>ssh -Y</code>, and use an [[SSH|SSH client]] like [[Connecting with MobaXTerm|MobaXTerm]] (Windows) or [https://www.xquartz.org/ XQuartz] (Mac) to ensure proper X11 tunnelling. | |||

<!--T:4--> | <!--T:4--> | ||

| Line 17: | Line 23: | ||

<!--T:5--> | <!--T:5--> | ||

The current license limits the use of DDT/MAP to a maximum of 512 CPU cores across all users at any given time | The current license limits the use of DDT/MAP to a maximum of 512 CPU cores across all users at any given time, while DDT-GPU is limited to 8 GPUs. | ||

= Usage = <!--T:6--> | = Usage = <!--T:6--> | ||

| Line 23: | Line 29: | ||

<!--T:7--> | <!--T:7--> | ||

Allocate the node or nodes on which to do the debugging or profiling | 1. Allocate the node or nodes on which to do the debugging or profiling. This will open a shell session on the allocated node. | ||

<!--T:8--> | <!--T:8--> | ||

salloc --x11 --time=0-1:00 --mem-per-cpu=4G --ntasks=4 | salloc --x11 --time=0-1:00 --mem-per-cpu=4G --ntasks=4 | ||

<!--T:9--> | <!--T:9--> | ||

2. Load the appropriate module, for example | |||

<!--T:10--> | <!--T:10--> | ||

module load ddt-cpu | |||

module load | |||

<!--T:13--> | <!--T:13--> | ||

3. Run the ddt or map command. | |||

<!--T:14--> | <!--T:14--> | ||

ddt path/to/code | ddt path/to/code | ||

map path/to/code | |||

<!--T:15--> | <!--T:15--> | ||

Make sure the MPI implementation is the default | :: Make sure the MPI implementation is the default OpenMPI in the DDT/MAP application window, before pressing the ''Run'' button. If this is not the case, press the ''Change'' button next to the ''Implementation:'' string, and select the correct option from the drop-down menu. Also, specify the desired number of cpu cores in this window. | ||

<!--T:16--> | <!--T:16--> | ||

When done, exit the shell | 4. When done, exit the shell to terminate the allocation. | ||

<!--T:34--> | |||

IMPORTANT: The current versions of DDT and OpenMPI have a compatibility issue which breaks the important feature of DDT - displaying message queues (available from the "Tools" drop down menu). There is a workaround: before running DDT, you have to execute the following command: | |||

<!--T:35--> | |||

$ export OMPI_MCA_pml=ob1 | |||

<!--T:36--> | |||

Be aware that the above workaround can make your MPI code run slower, so only use this trick when debugging. | |||

== CUDA code == <!--T:17--> | == CUDA code == <!--T:17--> | ||

<!--T:18--> | <!--T:18--> | ||

Allocate the node or nodes on which to do the debugging or profiling with <code>salloc</code> | 1. Allocate the node or nodes on which to do the debugging or profiling with <code>salloc</code>. This will open a shell session on the allocated node. | ||

<!--T:19--> | <!--T:19--> | ||

salloc --x11 --time=0-1:00 --mem-per-cpu=4G --ntasks=1 --gres=gpu:1 | salloc --x11 --time=0-1:00 --mem-per-cpu=4G --ntasks=1 --gres=gpu:1 | ||

<!--T:20--> | <!--T:20--> | ||

2. Load the appropriate module, for example | |||

<!--T:21--> | <!--T:21--> | ||

module load | module load ddt-gpu | ||

<!--T:22--> | <!--T:22--> | ||

This may fail with a suggestion to load an older version of OpenMPI first. | :: This may fail with a suggestion to load an older version of OpenMPI first. In this case, reload the OpenMPI module with the suggested command, and then reload the ddt-gpu module. | ||

<!--T:23--> | <!--T:23--> | ||

module load openmpi/2.0.2 | module load openmpi/2.0.2 | ||

module load ddt-gpu | |||

<!--T:24--> | <!--T:24--> | ||

Ensure a cuda module is loaded | 3. Ensure a cuda module is loaded. | ||

<!--T:25--> | <!--T:25--> | ||

module load cuda | module load cuda | ||

<!--T:26--> | <!--T:26--> | ||

4. Run the ddt command. | |||

<!--T:27--> | <!--T:27--> | ||

ddt path/to/code | ddt path/to/code | ||

<!--T:40--> | |||

If DDT complains about the mismatch between the CUDA driver and toolkit version, execute the following command and the run DDT again (use the version in this command), e.g. | |||

<!--T:41--> | |||

export ALLINEA_FORCE_CUDA_VERSION=10.1 | |||

<!--T:28--> | <!--T:28--> | ||

When done, exit the shell. This will | 5. When done, exit the shell to terminate the allocation. | ||

== Using VNC to fix the lag == <!--T:51--> | |||

<!--T:52--> | |||

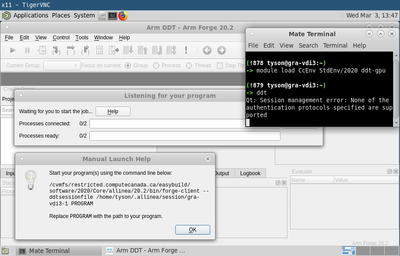

[[File:DDT-VNC-1.png|400px|thumb|right|DDT on '''gra-vdi.computecanada.ca''']] | |||

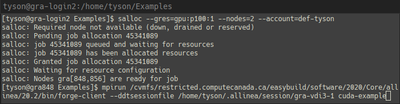

[[File:DDT-VNC-2.png|400px|thumb|right|Program on '''graham.computecanada.ca''']] | |||

<!--T:53--> | |||

The instructions above use X11 forwarding. X11 is very sensitive to packet latency. As a result, unless you happen to be on the same campus as the computer cluster, the ddt interface will likely be laggy and frustrating to use. This can be fixed by running ddt under VNC. | |||

<!--T:54--> | |||

To do this, follow the directions on our [[VNC|VNC page]] to setup a VNC session. If your VNC session is on the compute node, then you can directly start your program under ddt as above. If you VNC session is on the login node or you are using the graham vdi node, then you need to manual launch the job as follows. From the ddt startup screen | |||

<!--T:55--> | |||

* pick the ''manually launch backend yourself'' job start option, | |||

* enter the appropriate information for your job and press the ''listen'' button, and | |||

* press the ''help'' button to the right of ''waiting for you to start the job...''. | |||

<!--T:56--> | |||

This will then give you the command you need to run to start your job. Allocate a job on the cluster and start your program as directed. An example of doing this would be (where $USER is your username and $PROGAM ... is the command to start your program) | |||

<!--T:57--> | |||

<source lang="bash">[name@cluster-login:~]$ salloc ... | |||

[name@cluster-node:~]$ /cvmfs/restricted.computecanada.ca/easybuild/software/2020/Core/allinea/20.2/bin/forge-client --ddtsessionfile /home/$USER/.allinea/session/gra-vdi3-1 $PROGRAM ... | |||

</source> | |||

= Known issues = <!--T:33--> | |||

<!--T:42--> | |||

On graham, if you are experiencing issues with getting X11 to work, change permissions on your home directory so that only you have access. | |||

<!--T:43--> | |||

First, check (and record if needed) current permissions with | |||

<!--T:44--> | |||

ls -ld /home/$USER | |||

<!--T:45--> | |||

The output should begin with: | |||

<!--T:46--> | |||

drwx------ | |||

<!--T:47--> | |||

If some of the dashes are replaced by letters, that means your group and other users have read, write (unlikely), or execute permissions on your directory. | |||

<!--T:48--> | |||

This command will work to remove read and execute permissions for group and other users: | |||

<!--T:49--> | |||

chmod go-rx /home/$USER | |||

<!--T:50--> | |||

After you are done using DDT, you can if you like restore permissions to what they were (assuming you recorded them). More information on how to do this can be found on page [[Sharing_data]]. | |||

= | = See also = <!--T:37--> | ||

* | * [https://youtu.be/Q8HwLg22BpY "Debugging your code with DDT"], video, 55 minutes. | ||

* [[Parallel Debugging with DDT|A short DDT tutorial.]] | |||

</translate> | </translate> | ||

Latest revision as of 19:16, 15 July 2024

Introduction

ARM DDT (formerly known as Allinea DDT) is a powerful commercial parallel debugger with a graphical user interface. It can be used to debug serial, MPI, multi-threaded, and CUDA programs, or any combination of these, written in C, C++, and Fortran. MAP, an efficient parallel profiler, is another very useful tool from ARM (formerly Allinea).

The following modules are available on Graham:

- ddt-cpu, for CPU debugging and profiling;

- ddt-gpu, for GPU or mixed CPU/GPU debugging.

The following module is available on Niagara:

- ddt

As these are GUI applications, log in using ssh -Y, and use an SSH client such as MobaXTerm (Windows) or an X server such as XQuartz (Mac) to ensure proper X11 tunnelling.

Both DDT and MAP are normally used interactively through their GUI, typically from within an allocation obtained with the salloc command (see below for details). MAP can also be used non-interactively, in which case the job can be submitted to the scheduler with the sbatch command.
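For non-interactive use, a batch script along the following lines could be submitted with sbatch. This is only a sketch: the `--profile` option of `map`, the use of `srun` as the launcher, and the resource values are assumptions to be checked against the MAP documentation for the installed version, and `write_map_job` is a hypothetical helper name.

```shell
# Hypothetical helper: write a Slurm batch script that runs MAP without its GUI.
#   $1 = file to create (e.g. map_job.sh)
#   $2 = program to profile (e.g. path/to/code)
write_map_job() {
    cat > "$1" <<EOF
#!/bin/bash
#SBATCH --time=0-1:00
#SBATCH --mem-per-cpu=4G
#SBATCH --ntasks=4

module load ddt-cpu
# Assumption: "map --profile" collects a profile without opening the GUI.
map --profile srun $2
EOF
}

# Usage (on a login node):
#   write_map_job map_job.sh path/to/code
#   sbatch map_job.sh
```

The resource requests mirror the interactive salloc example used later on this page; adjust them to your job.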

The current license limits the use of DDT/MAP to a maximum of 512 CPU cores across all users at any given time, while DDT-GPU is limited to 8 GPUs.

Usage

CPU-only code, no GPUs

1. Allocate the node or nodes on which to do the debugging or profiling. This will open a shell session on the allocated node.

salloc --x11 --time=0-1:00 --mem-per-cpu=4G --ntasks=4

2. Load the appropriate module, for example

module load ddt-cpu

3. Run the ddt or map command.

ddt path/to/code

map path/to/code

- Make sure the MPI implementation is the default OpenMPI in the DDT/MAP application window before pressing the Run button. If this is not the case, press the Change button next to the Implementation: string, and select the correct option from the drop-down menu. Also, specify the desired number of CPU cores in this window.

4. When done, exit the shell to terminate the allocation.

IMPORTANT: The current versions of DDT and OpenMPI have a compatibility issue which breaks an important feature of DDT: displaying message queues (available from the "Tools" drop-down menu). As a workaround, execute the following command before running DDT:

$ export OMPI_MCA_pml=ob1

Be aware that this workaround can make your MPI code run slower, so use it only when debugging.
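Because the workaround can slow MPI code down, one option is to set the variable for the debugger invocation only, rather than exporting it for the whole shell session. A minimal sketch, where `with_ob1` is a hypothetical helper name:

```shell
# Hypothetical helper: run one command with OMPI_MCA_pml=ob1 in its
# environment, without exporting the variable in the calling shell.
with_ob1() {
    env OMPI_MCA_pml=ob1 "$@"
}

# Usage:
#   with_ob1 ddt path/to/code
```

Programs started later from the same shell then run without the setting.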

CUDA code

1. Allocate the node or nodes on which to do the debugging or profiling with salloc. This will open a shell session on the allocated node.

salloc --x11 --time=0-1:00 --mem-per-cpu=4G --ntasks=1 --gres=gpu:1

2. Load the appropriate module, for example

module load ddt-gpu

- This may fail with a suggestion to load an older version of OpenMPI first. In this case, reload the OpenMPI module with the suggested command, and then reload the ddt-gpu module.

module load openmpi/2.0.2

module load ddt-gpu

3. Ensure a cuda module is loaded.

module load cuda

4. Run the ddt command.

ddt path/to/code

If DDT complains about a mismatch between the CUDA driver and toolkit versions, execute the following command, using the appropriate CUDA version, and then run DDT again, e.g.

export ALLINEA_FORCE_CUDA_VERSION=10.1

5. When done, exit the shell to terminate the allocation.

Using VNC to fix the lag

The instructions above use X11 forwarding. X11 is very sensitive to packet latency. As a result, unless you happen to be on the same campus as the computer cluster, the ddt interface will likely be laggy and frustrating to use. This can be fixed by running ddt under VNC.

To do this, follow the directions on our VNC page to set up a VNC session. If your VNC session is on the compute node, then you can directly start your program under ddt as above. If your VNC session is on the login node or you are using the Graham VDI node, then you need to manually launch the job as follows. From the ddt startup screen:

- pick the manually launch backend yourself job start option,

- enter the appropriate information for your job and press the listen button, and

- press the help button to the right of waiting for you to start the job....

This will then give you the command you need to run to start your job. Allocate a job on the cluster and start your program as directed. An example of doing this would be (where $USER is your username and $PROGRAM ... is the command to start your program):

[name@cluster-login:~]$ salloc ...

[name@cluster-node:~]$ /cvmfs/restricted.computecanada.ca/easybuild/software/2020/Core/allinea/20.2/bin/forge-client --ddtsessionfile /home/$USER/.allinea/session/gra-vdi3-1 $PROGRAM ...

Known issues

On Graham, if you are experiencing issues with getting X11 to work, change permissions on your home directory so that only you have access.

First, check (and record if needed) current permissions with

ls -ld /home/$USER

The output should begin with:

drwx------

If some of the dashes are replaced by letters, that means your group and other users have read, write (unlikely), or execute permissions on your directory.

The following command removes read and execute permissions for group and other users:

chmod go-rx /home/$USER

After you are done using DDT, you can restore the permissions to what they were if you wish (assuming you recorded them). More information on how to do this can be found on the Sharing data page.
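Recording and restoring the permissions can be sketched as follows. The helper names are hypothetical, and `stat -c %a` is the GNU coreutils form available on Linux; other systems may need a different option.

```shell
# Hypothetical helpers for recording, restricting and restoring permissions.

# Print the current permission bits of a directory in octal, e.g. 750.
save_mode() {
    stat -c %a "$1"
}

# Remove read and execute permissions for group and other users.
restrict_dir() {
    chmod go-rx "$1"
}

# Restore a mode previously printed by save_mode.
#   $1 = directory, $2 = saved octal mode
restore_mode() {
    chmod "$2" "$1"
}

# Usage:
#   old=$(save_mode "/home/$USER")
#   restrict_dir "/home/$USER"
#   ... use DDT ...
#   restore_mode "/home/$USER" "$old"
```

Keeping the saved mode in a variable only works within one shell session; write it down or store it in a file if you intend to restore it later.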

See also

- "Debugging your code with DDT", video, 55 minutes.

- A short DDT tutorial.