Managing your cloud resources with OpenStack

(style editing continued) |

(East->Beluga cloud) |

||

| (253 intermediate revisions by 13 users not shown) | |||

| Line 1: | Line 1: | ||

<languages /> | |||

<translate> | |||

<!--T:1--> | |||

<i>Parent page: [[Cloud]]</i> | |||

<!--T:2--> | |||

OpenStack is the software suite used on our clouds to control hardware resources such as computers, storage and networking. It allows the creation and management of virtual machines ("VMs" or "instances"), which act like separate individual machines, by emulation in software. This allows complete control over the computing environment, from choosing an operating system to software installation and configuration. Diverse use cases are supported, from hosting websites to creating virtual clusters. More documentation on OpenStack can be found at the [http://docs.openstack.org/ OpenStack website]. | |||

<!--T:63--> | |||

This page describes how to perform common tasks encountered while working with OpenStack. It is assumed that you have already read [[Cloud Quick Start]] and understand the basic operations of launching and connecting to a VM. Most tasks can be performed using either the dashboard (as described below), the [[OpenStack command line clients]], or a tool called [[Terraform]]; however, some tasks require using command line tools, for example [[Working_with_images#Sharing_an_image_with_another_project|sharing an image with another project]]. | |||

== | =Working with the dashboard= <!--T:91--> | ||

The web browser user interface used to manage your cloud resources, as described in most of our documentation, is referred to as the "dashboard". The dashboard is developed under an OpenStack sub-project referred to as Horizon. Horizon and dashboard might be used interchangeably. The dashboard is well documented [https://docs.openstack.org/horizon/latest/ here]. This documentation lists all of the options available on the dashboard, what they do, and how to navigate the system. | |||

=Projects= <!--T:64--> | |||

OpenStack projects group VMs together and provide a quota out of which VMs and related resources can be created. A project is unique to a particular cloud. All accounts which are members of a project have the same level of permissions, meaning anyone can create or delete a VM within a project if they are a member. You can view the projects you are a member of by logging into an OpenStack dashboard for the clouds you have access to (see [[Cloud#Cloud_systems|Cloud systems]] for a list of cloud URLs). The active <b>project name</b> will be displayed in the top left of the dashboard, to the right of the cloud logo. If you are a member of more than one project, you can switch between active projects by clicking on the drop-down menu and selecting the project's name. | |||

<!--T:74--> | |||

Depending on your allocation, your project may be limited to certain types of VM [[Virtual_machine_flavors|flavors]]. For example, compute allocations will generally only allow "c" flavors, while persistent allocations will generally only allow "p" flavors. | |||

<!--T:75--> | |||

Projects can be thought of as owned by primary investigators (PIs) and new projects and quota adjustments can only be requested by PIs. In addition, request for access to an existing project must be confirmed by the PI owning the project. | |||

=Working with volumes= <!--T:8--> | |||

Please see [[Working_with_volumes|this page]] for more information about creating and managing storage volumes. | |||

== | =Working with images= <!--T:42--> | ||

Please see [[Working_with_images|this page]] for more information about creating and managing disk image files. | |||

== | = Working with VMs= <!--T:69--> | ||

Please see [[Working_with_VMs|this page]] for more information about managing certain characteristics of your VMs in the dashboard. | |||

< | =Availability zones= <!--T:72--> | ||

< | Availability zones allow you to indicate what group of physical hardware you would like your VM to run on. On Beluga and Graham clouds, there is only one availability zone, <i>nova</i>, so there isn't any choice in the matter. However, on Arbutus there are three availability zones: <i>Compute</i>, <i>Persistent_01</i>, and <i>Persistent_02</i>. The <i>Compute</i> and <i>Persistent</i> zones only run compute or persistent flavors respectively (see [[Virtual machine flavors]]). Using two persistent zones can present an advantage; for example, two instances of a website can run in two different zones to ensure its continuous availability in the case where one of the sites goes down. | ||

< | |||

=Security groups= <!--T:3--> | |||

A security group is a set of rules to control network traffic into and out of your virtual machines. To manage security groups, go to <i>Project->Network->Security Groups</i>. You will see a list of currently defined security groups. If you have not previously defined any security groups, there will be a single default security group. | |||

< | <!--T:4--> | ||

To add or remove rules from a security group, click <i>Manage Rules</i> beside that group. When the group description is displayed, you can add or remove rules by clicking the <i>+Add Rule</i> and <i>Delete Rule</i> buttons. | |||

< | |||

</ | |||

== Default security group == <!--T:5--> | |||

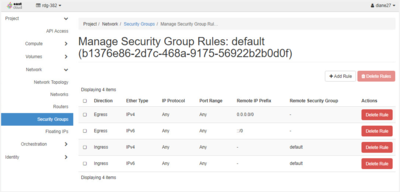

[[File:Default_security_group_EN.png|400px|thumb| Default Security Group Rules (Click for larger image)]] | |||

The <b>default security group</b> contains rules which allow a VM access out to the internet, for example to download operating system upgrades or package installations, but does not allow another machine to access it, except for other VMs belonging to the same default security group. We recommend you do not remove rules from the default security group as this may cause problems when creating new VMs. The image on the right shows the default security group rules that should be present: | |||

* 2 Egress rules to allow your instance to access an outside network without any limitation; there is one rule for IPV4 and one for IPV6. | |||

* 2 Ingress rules to allow communication for all the VMs that belong to that security group, for both IPV4 and IPV6. | |||

It is safe to add rules to the default security group and you may recall that we did this in [[Cloud Quick Start]] by either adding security rules for [[Cloud_Quick_Start#Network_settings|SSH]] or [[Cloud_Quick_Start#FCreating_your_first_virtual_machine|RDP (see <i>Firewall, add rules to allow RDP</i> under the Windows tab)]] to your default security group so that you could connect to your VM. | |||

== Managing security groups == <!--T:6--> | |||

You can define multiple security groups and a VM can belong to more than one security group. When deciding on how to manage your security groups and rules, think carefully about what needs to be accessed and who needs to access it. Strive to minimize the IP addresses and ports in your Ingress rules. For example, if you will always be connecting to your VM via SSH from the same computer with a static IP, it makes sense to allow SSH access only from that IP. To specify the allowed IP or IP range, use the [[OpenStack#Using_CIDR_rules|CIDR]] box (use this web-based tool for converting [http://www.ipaddressguide.com/cidr IP ranges to CIDR] rules). Further, if you only need to connect to one VM via SSH from the outside and then can use that as a gateway to any other cloud VMs, it makes sense to put the SSH rule in a separate security group and add that group only to the gateway VM. However, you will also need to ensure your SSH keys are configured correctly to allow you to use SSH between VMs (see [[SSH Keys]]). In addition to CIDR, security rules can be limited within a project using security groups. For example, you can configure a security rule for a VM in your project running a MySQL Database to be accessible from other VMs in the default security group. | |||

<!--T:7--> | |||

The security groups a VM belongs to can be chosen when it is created on the <i>Launch Instance</i> with the <i>Security Groups</i> option, or after the VM has been launched by selecting <i>Edit Security Groups</i> from the drop-down menu of actions for the VM on the <i>Project->Compute->Instances</i> page. | |||

== | ==Using CIDR rules== <!--T:67--> | ||

CIDR stands for Classless Inter-Domain Routing and is a standardized way of defining IP ranges (see also this Wikipedia page on [https://en.wikipedia.org/wiki/Classless_Inter-Domain_Routing CIDR]). | |||

<!--T:68--> | |||

An example of a CIDR rule is <code>192.168.1.1/24</code>. This looks just like a normal IP address with a <code>/24</code> appended to it. IP addresses are made up of 4, 1-byte (8 bits) numbers ranging from 0 to 255. What this <code>/24</code> means is that this CIDR rule will match the first left most 24 bits (3 bytes) of an IP address. In this case, any IP address starting with <code>192.168.1</code> will match this CIDR rule. If <code>/32</code> is appended, the full 32 bits of the IP address must match exactly; if <code>/0</code> is appended, no bits must match and therefore any IP address will match it. | |||

=Working with cloudInit= <!--T:89--> | |||

<!--T:90--> | |||

<b>The first time your instance is launched</b>, you can customize it using cloudInit. This can be done either | |||

* via the OpenStack command-line interface, or | |||

* by pasting your cloudInit script in the <i>Customization Script</i> field of the OpenStack dashboard (<i>Project-->Compute-->Instances-->Launch instance</i> button, <i>Configuration</i> option). | |||

== | ==Add users with cloudInit during VM creation== <!--T:30--> | ||

[[File:VM multi user cloud init.png|400px|thumb| Cloud init to add multiple users (Click for larger image)]] | |||

Alternatively, you can do this during the creation of a VM using [http://cloudinit.readthedocs.org/en/latest/index.html# cloudInit]. The following cloudInit script adds two users <code>gretzky</code> and <code>lemieux</code> with and without sudo permissions respectively. | |||

<!--T:31--> | |||

#cloud-config | |||

users: | |||

- name: gretzky | |||

shell: /bin/bash | |||

sudo: ALL=(ALL) NOPASSWD:ALL | |||

ssh_authorized_keys: | |||

- <Gretzky's public key goes here> | |||

- name: lemieux | |||

shell: /bin/bash | |||

ssh_authorized_keys: | |||

- <Lemieux's public key goes here> | |||

<!--T:32--> | |||

For more about the YAML format used by cloudInit, see [http://www.yaml.org/spec/1.2/spec.html#Preview YAML Preview]. Note that YAML is very picky about white space formatting, so that there must be a space after the "-" before your public key string. Also, this configuration overwrites the default user that is added when no cloudInit script is specified, so the users listed in this configuration script will be the <i>only</i> users on the newly created VM. It is therefore vital to have at least one user with sudo permission. More users can be added by simply including more <code>- name: username</code> sections. | |||

<!--T:33--> | |||

If you wish to preserve the default user created by the distribution (users <code>debian, centos, ubuntu,</code> <i>etc.</i>), use the following form: | |||

<!--T:34--> | |||

#cloud-config | #cloud-config | ||

users: | users: | ||

- name: | - default | ||

- name: gretzky | |||

shell: /bin/bash | shell: /bin/bash | ||

sudo: ALL=(ALL) NOPASSWD:ALL | sudo: ALL=(ALL) NOPASSWD:ALL | ||

ssh_authorized_keys: | ssh_authorized_keys: | ||

- < | - <Gretzky's public key goes here> | ||

- name: | - name: lemieux | ||

shell: /bin/bash | shell: /bin/bash | ||

ssh_authorized_keys: | ssh_authorized_keys: | ||

- < | - <Lemieux's public key goes here> | ||

After the VM has finished spawning, | <!--T:35--> | ||

After the VM has finished spawning, look at the log to ensure that the public keys have been added correctly for those users. The log can be found by clicking on the name of the instance on the "Compute->Instances" panel and then selecting the "log" tab. The log should show something like this: | |||

ci-info: ++++++++Authorized keys from /home/ | <!--T:36--> | ||

ci-info: ++++++++Authorized keys from /home/gretzky/.ssh/authorized_keys for user gretzky++++++++ | |||

ci-info: +---------+-------------------------------------------------+---------+------------------+ | ci-info: +---------+-------------------------------------------------+---------+------------------+ | ||

ci-info: | Keytype | Fingerprint (md5) | Options | Comment | | ci-info: | Keytype | Fingerprint (md5) | Options | Comment | | ||

| Line 104: | Line 113: | ||

ci-info: | ssh-rsa | ad:a6:35:fc:2a:17:c9:02:cd:59:38:c9:18:dd:15:19 | - | rsa-key-20160229 | | ci-info: | ssh-rsa | ad:a6:35:fc:2a:17:c9:02:cd:59:38:c9:18:dd:15:19 | - | rsa-key-20160229 | | ||

ci-info: +---------+-------------------------------------------------+---------+------------------+ | ci-info: +---------+-------------------------------------------------+---------+------------------+ | ||

ci-info: ++++++++++++Authorized keys from /home/ | ci-info: ++++++++++++Authorized keys from /home/lemieux/.ssh/authorized_keys for user lemieux++++++++++++ | ||

ci-info: +---------+-------------------------------------------------+---------+------------------+ | ci-info: +---------+-------------------------------------------------+---------+------------------+ | ||

ci-info: | Keytype | Fingerprint (md5) | Options | Comment | | ci-info: | Keytype | Fingerprint (md5) | Options | Comment | | ||

| Line 111: | Line 120: | ||

ci-info: +---------+-------------------------------------------------+---------+------------------+ | ci-info: +---------+-------------------------------------------------+---------+------------------+ | ||

Once this is done, users can log into the VM with their private keys as usual (see [[ | <!--T:37--> | ||

Once this is done, users can log into the VM with their private keys as usual (see [[SSH Keys]]). | |||

<!--T:49--> | |||

[[Category:Cloud]] | |||

</translate> | |||

Latest revision as of 20:41, 7 July 2023

Parent page: Cloud

OpenStack is the software suite used on our clouds to control hardware resources such as computers, storage and networking. It allows the creation and management of virtual machines ("VMs" or "instances"), which are emulated in software and act like separate individual machines. This gives you complete control over the computing environment, from choosing an operating system to software installation and configuration. Diverse use cases are supported, from hosting websites to creating virtual clusters. More documentation on OpenStack can be found at the OpenStack website (http://docs.openstack.org/).

This page describes how to perform common tasks encountered while working with OpenStack. It is assumed that you have already read Cloud Quick Start and understand the basic operations of launching and connecting to a VM. Most tasks can be performed using either the dashboard (as described below), the OpenStack command line clients, or a tool called Terraform; however, some tasks require using command line tools, for example sharing an image with another project.

Working with the dashboard

The web browser user interface used to manage your cloud resources, as described in most of our documentation, is referred to as the "dashboard". The dashboard is developed under an OpenStack sub-project called Horizon, so the terms Horizon and dashboard may be used interchangeably. The dashboard is well documented in the Horizon documentation (https://docs.openstack.org/horizon/latest/), which lists all of the options available on the dashboard, what they do, and how to navigate the system.

Projects

OpenStack projects group VMs together and provide a quota out of which VMs and related resources can be created. A project is unique to a particular cloud. All accounts which are members of a project have the same level of permissions, meaning anyone can create or delete a VM within a project if they are a member. You can view the projects you are a member of by logging into an OpenStack dashboard for the clouds you have access to (see Cloud systems for a list of cloud URLs). The active project name will be displayed in the top left of the dashboard, to the right of the cloud logo. If you are a member of more than one project, you can switch between active projects by clicking on the drop-down menu and selecting the project's name.

Depending on your allocation, your project may be limited to certain types of VM flavors. For example, compute allocations will generally only allow "c" flavors, while persistent allocations will generally only allow "p" flavors.

Projects can be thought of as owned by principal investigators (PIs); new projects and quota adjustments can only be requested by PIs. In addition, requests for access to an existing project must be confirmed by the PI who owns the project.

Working with volumes

Please see the Working with volumes page for more information about creating and managing storage volumes.

Working with images

Please see the Working with images page for more information about creating and managing disk image files.

Working with VMs

Please see the Working with VMs page for more information about managing certain characteristics of your VMs in the dashboard.

Availability zones

Availability zones allow you to indicate what group of physical hardware you would like your VM to run on. On Beluga and Graham clouds, there is only one availability zone, nova, so there isn't any choice in the matter. However, on Arbutus there are three availability zones: Compute, Persistent_01, and Persistent_02. The Compute and Persistent zones only run compute or persistent flavors respectively (see Virtual machine flavors). Using two persistent zones can present an advantage; for example, two instances of a website can run in two different zones to ensure its continuous availability in the case where one of the sites goes down.

Security groups

A security group is a set of rules to control network traffic into and out of your virtual machines. To manage security groups, go to Project->Network->Security Groups. You will see a list of currently defined security groups. If you have not previously defined any security groups, there will be a single default security group.

To add or remove rules from a security group, click Manage Rules beside that group. When the group description is displayed, you can add or remove rules by clicking the +Add Rule and Delete Rule buttons.

Default security group

The default security group contains rules which allow a VM outbound access to the internet, for example to download operating system upgrades or install packages, but do not allow other machines to access it, except for other VMs belonging to the same default security group. We recommend that you do not remove rules from the default security group, as this may cause problems when creating new VMs. The image on the right shows the default security group rules that should be present:

- 2 Egress rules to allow your instance to access an outside network without any limitation; there is one rule for IPv4 and one for IPv6.

- 2 Ingress rules to allow communication between all the VMs that belong to that security group, for both IPv4 and IPv6.

It is safe to add rules to the default security group. You may recall that we did this in Cloud Quick Start, adding rules for either SSH or RDP (see Firewall, add rules to allow RDP under the Windows tab) to your default security group so that you could connect to your VM.

Managing security groups

You can define multiple security groups, and a VM can belong to more than one security group. When deciding how to manage your security groups and rules, think carefully about what needs to be accessed and who needs to access it. Strive to minimize the IP addresses and ports in your Ingress rules. For example, if you will always be connecting to your VM via SSH from the same computer with a static IP, it makes sense to allow SSH access only from that IP. To specify the allowed IP or IP range, use the CIDR box (see Using CIDR rules below; a web-based tool for converting IP ranges to CIDR rules is available at http://www.ipaddressguide.com/cidr). Further, if you only need to connect to one VM via SSH from the outside and can then use that VM as a gateway to any other cloud VMs, it makes sense to put the SSH rule in a separate security group and add that group only to the gateway VM. However, you will also need to ensure your SSH keys are configured correctly to allow you to use SSH between VMs (see SSH Keys). In addition to CIDR, the source of a security rule can be restricted to a security group within the project. For example, you can configure a security rule so that a VM in your project running a MySQL database is accessible from other VMs in the default security group.
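The effect of narrowing Ingress rules can be illustrated with a small Python model. This is only a sketch of the matching logic, not OpenStack's implementation or API: the IngressRule class, the is_allowed function and the addresses used (taken from the reserved documentation range 203.0.113.0/24) are all hypothetical names chosen for this illustration.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    """Toy model of an Ingress rule: a source CIDR and a port range."""
    cidr: str
    port_min: int
    port_max: int


def is_allowed(rules, source_ip, port):
    """Return True if any rule admits traffic from source_ip to port."""
    source = ipaddress.ip_address(source_ip)
    for rule in rules:
        # strict=False accepts CIDR strings that have host bits set
        network = ipaddress.ip_network(rule.cidr, strict=False)
        if source in network and rule.port_min <= port <= rule.port_max:
            return True
    return False


# Hypothetical rule sets: SSH from one static IP versus SSH from anywhere
restricted_ssh = [IngressRule("203.0.113.10/32", 22, 22)]
open_ssh = [IngressRule("0.0.0.0/0", 22, 22)]

print(is_allowed(restricted_ssh, "203.0.113.10", 22))  # True
print(is_allowed(restricted_ssh, "203.0.113.99", 22))  # False
print(is_allowed(open_ssh, "203.0.113.99", 22))        # True
print(is_allowed(open_ssh, "203.0.113.99", 3306))      # False
```

With the restricted rule set, only the single static address reaches port 22, which is the situation recommended above for a gateway VM.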

The security groups a VM belongs to can be chosen when it is created, using the Security Groups option of the Launch Instance window, or after the VM has been launched, by selecting Edit Security Groups from the drop-down menu of actions for the VM on the Project->Compute->Instances page.

Using CIDR rules

CIDR stands for Classless Inter-Domain Routing and is a standardized way of defining IP ranges (see also this Wikipedia page on CIDR).

An example of a CIDR rule is 192.168.1.1/24. This looks just like a normal IP address with a /24 appended to it. An IPv4 address is made up of four 1-byte (8-bit) numbers, each ranging from 0 to 255. The /24 means that this CIDR rule matches on the leftmost 24 bits (3 bytes) of an IP address. In this case, any IP address starting with 192.168.1 will match this CIDR rule. If /32 is appended, the full 32 bits of the IP address must match exactly; if /0 is appended, no bits must match and therefore any IP address will match it.
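The prefix-length behaviour described above can be checked with Python's standard ipaddress module. This is a minimal sketch; the helper name matches_cidr is an assumption made for this example and is not part of OpenStack.

```python
import ipaddress


def matches_cidr(address, cidr):
    """Return True if address falls inside the range described by cidr."""
    # strict=False allows host bits to be set, as in 192.168.1.1/24;
    # the network is then taken to be 192.168.1.0/24.
    network = ipaddress.ip_network(cidr, strict=False)
    return ipaddress.ip_address(address) in network


# /24: only the leftmost 24 bits (192.168.1) have to match
print(matches_cidr("192.168.1.77", "192.168.1.1/24"))  # True
print(matches_cidr("192.168.2.77", "192.168.1.1/24"))  # False

# /32: all 32 bits have to match
print(matches_cidr("192.168.1.1", "192.168.1.1/32"))   # True
print(matches_cidr("192.168.1.2", "192.168.1.1/32"))   # False

# /0: no bits have to match, so any address matches
print(matches_cidr("8.8.8.8", "192.168.1.1/0"))        # True
```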

Working with cloudInit

The first time your instance is launched, you can customize it using cloudInit. This can be done either

- via the OpenStack command-line interface, or

- by pasting your cloudInit script in the Customization Script field of the OpenStack dashboard (Project->Compute->Instances, Launch Instance button, Configuration option).

Add users with cloudInit during VM creation

You can add users during the creation of a VM using cloudInit (http://cloudinit.readthedocs.org/en/latest/index.html). The following cloudInit script adds two users, gretzky and lemieux, with and without sudo permissions respectively.

#cloud-config
users:
  - name: gretzky
    shell: /bin/bash
    sudo: ALL=(ALL) NOPASSWD:ALL
    ssh_authorized_keys:
      - <Gretzky's public key goes here>
  - name: lemieux
    shell: /bin/bash
    ssh_authorized_keys:
      - <Lemieux's public key goes here>

For more about the YAML format used by cloudInit, see YAML Preview (http://www.yaml.org/spec/1.2/spec.html#Preview). Note that YAML is very sensitive to whitespace: there must be a space after the "-" before your public key string, and nested entries must be indented consistently. Also, this configuration replaces the default user that is added when no cloudInit script is specified, so the users listed in this configuration script will be the only users on the newly created VM. It is therefore vital to have at least one user with sudo permission. More users can be added by simply including more "- name: username" sections.

If you wish to preserve the default user created by the distribution (users debian, centos, ubuntu, etc.), use the following form:

#cloud-config
users:
  - default
  - name: gretzky
    shell: /bin/bash
    sudo: ALL=(ALL) NOPASSWD:ALL
    ssh_authorized_keys:
      - <Gretzky's public key goes here>
  - name: lemieux
    shell: /bin/bash
    ssh_authorized_keys:
      - <Lemieux's public key goes here>
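Because the indentation of such a script is easy to get wrong by hand, the text can also be generated. The following Python sketch builds a script with the same layout as the examples above; the function name build_cloud_config and the shape of its input (a list of dictionaries with name, keys and an optional sudo flag) are assumptions made for this illustration, and the key strings are the same placeholders used above.

```python
def build_cloud_config(users, keep_default=False):
    """Return cloudInit user-data text that creates the given users.

    users is a list of dicts with "name", "keys" (list of public key
    strings) and an optional "sudo" flag. This input format is a
    hypothetical convention for this sketch only.
    """
    lines = ["#cloud-config", "users:"]
    if keep_default:
        # Preserve the distribution's default user (debian, ubuntu, etc.)
        lines.append("  - default")
    for user in users:
        lines.append(f"  - name: {user['name']}")
        lines.append("    shell: /bin/bash")
        if user.get("sudo"):
            lines.append("    sudo: ALL=(ALL) NOPASSWD:ALL")
        lines.append("    ssh_authorized_keys:")
        for key in user["keys"]:
            # One space after the dash, as YAML requires
            lines.append(f"      - {key}")
    return "\n".join(lines) + "\n"


example_users = [
    {"name": "gretzky", "sudo": True,
     "keys": ["<Gretzky's public key goes here>"]},
    {"name": "lemieux",
     "keys": ["<Lemieux's public key goes here>"]},
]

print(build_cloud_config(example_users, keep_default=True))
```

The printed text can then be pasted into the Customization Script field once the placeholders have been replaced with real public keys.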

After the VM has finished spawning, look at the log to ensure that the public keys have been added correctly for those users. The log can be found by clicking on the name of the instance on the "Compute->Instances" panel and then selecting the "log" tab. The log should show something like this:

ci-info: ++++++++Authorized keys from /home/gretzky/.ssh/authorized_keys for user gretzky++++++++
ci-info: +---------+-------------------------------------------------+---------+------------------+
ci-info: | Keytype |                Fingerprint (md5)                | Options |     Comment      |
ci-info: +---------+-------------------------------------------------+---------+------------------+
ci-info: | ssh-rsa | ad:a6:35:fc:2a:17:c9:02:cd:59:38:c9:18:dd:15:19 |    -    | rsa-key-20160229 |
ci-info: +---------+-------------------------------------------------+---------+------------------+
ci-info: ++++++++++++Authorized keys from /home/lemieux/.ssh/authorized_keys for user lemieux++++++++++++
ci-info: +---------+-------------------------------------------------+---------+------------------+
ci-info: | Keytype |                Fingerprint (md5)                | Options |     Comment      |
ci-info: +---------+-------------------------------------------------+---------+------------------+
ci-info: | ssh-rsa | ad:a6:35:fc:2a:17:c9:02:cd:59:38:c9:18:dd:15:19 |    -    | rsa-key-20160229 |
ci-info: +---------+-------------------------------------------------+---------+------------------+
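If the log text is copied from the dashboard, the check can also be done with a short script. The Python sketch below assumes the log has exactly the ci-info layout shown above (other cloudInit versions may format it differently); the function name keys_by_user and the SAMPLE_LOG variable are hypothetical names for this example.

```python
import re

# Section banner, e.g. "...Authorized keys from /home/x/.ssh/authorized_keys for user x..."
BANNER = re.compile(r"Authorized keys from (\S+) for user (\w+)")
# Table row holding a key: key type, then a colon-separated hex fingerprint
KEY_ROW = re.compile(r"\|\s*(\S+)\s*\|\s*([0-9a-f]{2}(?::[0-9a-f]{2})+)\s*\|")


def keys_by_user(log_text):
    """Map each user named in the log to the list of key fingerprints found."""
    result = {}
    current_user = None
    for line in log_text.splitlines():
        banner = BANNER.search(line)
        if banner:
            current_user = banner.group(2)
            result[current_user] = []
            continue
        row = KEY_ROW.search(line)
        if row and current_user is not None:
            result[current_user].append(row.group(2))
    return result


SAMPLE_LOG = """\
ci-info: ++++++++Authorized keys from /home/gretzky/.ssh/authorized_keys for user gretzky++++++++
ci-info: | Keytype | Fingerprint (md5) | Options | Comment |
ci-info: | ssh-rsa | ad:a6:35:fc:2a:17:c9:02:cd:59:38:c9:18:dd:15:19 | - | rsa-key-20160229 |
ci-info: ++++++++++++Authorized keys from /home/lemieux/.ssh/authorized_keys for user lemieux++++++++++++
ci-info: | Keytype | Fingerprint (md5) | Options | Comment |
ci-info: | ssh-rsa | ad:a6:35:fc:2a:17:c9:02:cd:59:38:c9:18:dd:15:19 | - | rsa-key-20160229 |
"""

found = keys_by_user(SAMPLE_LOG)
for user in ("gretzky", "lemieux"):
    status = "key present" if found.get(user) else "NO KEY FOUND"
    print(f"{user}: {status}")
```

A user who appears in the cloudInit script but has no fingerprint in the result probably had a malformed key entry.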

Once this is done, users can log into the VM with their private keys as usual (see SSH Keys).