Multi-Instance GPU

Revision as of 16:50, 13 August 2024

Introduction

Many programs are unable to fully use modern GPUs such as the NVIDIA A100 and H100. Multi-Instance GPU (MIG) is a technology that partitions a single GPU into multiple GPU instances, each of which behaves as a fully independent GPU. Each GPU instance receives a fixed share of the GPU's computational resources and memory, isolated from the other instances by on-chip protections.

GPU instances waste fewer resources, and their usage is billed accordingly: jobs running on a GPU instance use less of your allocation's priority than jobs running on a full GPU. As a result, you can run more jobs and have shorter wait times.

Which jobs should use GPU instances instead of full GPUs?

Jobs that use less than half of the computing power of a GPU and less than half of its memory should be evaluated and tested on a GPU instance. In most cases, these jobs will run just as fast on a GPU instance while consuming less than half of the computing resources.
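The rule of thumb above can be written as a small shell check. This is a minimal sketch: `mig_candidate` is a hypothetical helper, and it assumes you already know the job's average GPU utilization (in %), its peak GPU memory use and the GPU's total memory (both in MiB), for example from the usage portal described later on this page.

```shell
# Hypothetical helper: print "candidate" if a job uses less than half of the
# GPU's compute AND less than half of its memory, otherwise "full-gpu".
# Arguments (integers): avg_util_pct peak_mem_mib total_mem_mib
mig_candidate() {
    local avg_util_pct=$1 peak_mem_mib=$2 total_mem_mib=$3
    # Compare 2*peak with total to avoid rounding from integer division.
    if [ "$avg_util_pct" -lt 50 ] && [ $((peak_mem_mib * 2)) -lt "$total_mem_mib" ]; then
        echo "candidate"
    else
        echo "full-gpu"
    fi
}

# Example: 20% average utilization and 3 GiB peak memory,
# assuming a 40 GiB (40960 MiB) A100.
mig_candidate 20 3072 40960
```

A "candidate" result only means the job is worth testing on a GPU instance; the actual run time on the instance is what confirms it.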

Limitations

GPU instances do not support CUDA Inter-Process Communication (IPC), which optimises data transfers between GPUs over NVLink and NVSwitch. This limitation also affects communication between GPU instances on the same GPU. Consequently, launching an executable on more than one GPU instance at a time does not improve performance and should be avoided.

GPU jobs requiring many CPU cores may also require a full GPU instead of a GPU instance. The maximum number of CPU cores per GPU instance depends on the number of cores per full GPU and on the configured MIG profiles. Both factors may vary between clusters and also between GPU nodes in a cluster.
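To make this dependency concrete, here is a rough sketch of the arithmetic: the cores of a node are shared between its full GPUs, and then between the instances carved out of each GPU. `cores_per_instance` is a hypothetical helper, and the node figures in the example (48 cores and 4 GPUs per node, each GPU split into 2 instances) are assumptions for illustration; check the actual configuration of the nodes you use.

```shell
# Hypothetical helper: estimate the CPU cores available per GPU instance.
# Arguments (integers): cores_per_node gpus_per_node instances_per_gpu
cores_per_instance() {
    local cores_per_node=$1 gpus_per_node=$2 instances_per_gpu=$3
    # Share cores evenly between full GPUs, then between the instances
    # of each GPU; integer division rounds down.
    echo $(( cores_per_node / gpus_per_node / instances_per_gpu ))
}

# Assumed example: 48 cores, 4 GPUs per node, 2 instances per GPU.
cores_per_instance 48 4 2
```

Under these assumptions the result is 6 cores per instance; a node with a different core count, GPU count or MIG layout gives a different figure.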

Available configurations

As of July 30, 2024, only Narval has a few A100 nodes configured with MIG. While many MIG configurations and profiles are possible, only the following two profiles have been implemented on selected GPUs:

- 3g.20gb
- 4g.20gb

The profile name describes the size of the GPU instance.

For example, a 3g.20gb instance has 20 GB of GPU RAM and offers 3/8 of the computing performance of a full A100-40gb GPU. Using less powerful MIG profiles will have a lower impact on your allocation and priority.
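In the profile names above, the number before `g` gives the instance's share of the compute and the number before `gb` gives its GPU memory. A minimal sketch that splits a profile name with shell parameter expansion; `parse_mig_profile` is a hypothetical helper, and it assumes names of the form `<N>g.<M>gb`, like the two profiles listed above.

```shell
# Hypothetical helper: split a MIG profile name such as "3g.20gb" into its
# compute part (the number before "g") and its GPU memory in GB.
parse_mig_profile() {
    local profile="$1"
    local compute="${profile%%g.*}"   # "3g.20gb" -> "3"
    local mem="${profile#*.}"         # "3g.20gb" -> "20gb"
    mem="${mem%gb}"                   # "20gb"    -> "20"
    echo "compute=$compute memory_gb=$mem"
}

parse_mig_profile 3g.20gb
parse_mig_profile 4g.20gb
```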

On Narval, the recommended maximum number of cores and amount of system memory per GPU instance are:

- 3g.20gb: maximum 6 cores and 62 GB
- 4g.20gb: maximum 6 cores and 62 GB

To request a GPU instance of a certain profile, your job submission must include a --gres parameter:

- 3g.20gb: --gres=gpu:a100_3g.20gb:1
- 4g.20gb: --gres=gpu:a100_4g.20gb:1

Note: for the job scheduler on Narval, the prefix a100_ is required before the profile name.
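If you generate job submissions from a script, a small helper can add the `a100_` prefix for you and reject profiles that are not available. This is a sketch only: `mig_gres` is a hypothetical name, it accepts just the two profiles listed above (as of July 2024), and it always requests exactly one GPU instance, in line with the limitation on using several instances at once.

```shell
# Hypothetical helper: build the --gres option for one GPU instance on Narval,
# adding the required "a100_" prefix to the MIG profile name.
mig_gres() {
    local profile="$1"
    case $profile in
        3g.20gb|4g.20gb)
            printf '%s\n' "--gres=gpu:a100_${profile}:1" ;;
        *)
            echo "unsupported MIG profile: $profile" >&2
            return 1 ;;
    esac
}

mig_gres 4g.20gb
```

For instance, `salloc --account=def-someuser $(mig_gres 3g.20gb) --cpus-per-task=2 --mem=40gb --time=1:0:0` expands to the interactive request shown in the job examples.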

Job examples

- Request a GPU instance of power 3/8 and size 20GB for a 1-hour interactive job:

[name@server ~]$ salloc --account=def-someuser --gres=gpu:a100_3g.20gb:1 --cpus-per-task=2 --mem=40gb --time=1:0:0

- Request a GPU instance of power 4/8 and size 20GB for a 24-hour batch job using the maximum recommended number of cores and system memory:

```bash
#!/bin/bash
#SBATCH --account=def-someuser
#SBATCH --gres=gpu:a100_4g.20gb:1
#SBATCH --cpus-per-task=6  # Recommended maximum of 6 CPU cores per 3g.20gb or 4g.20gb instance on Narval.
#SBATCH --mem=62gb         # Recommended maximum of 62 GB of system memory per 3g.20gb or 4g.20gb instance on Narval.
#SBATCH --time=24:00:00

hostname
nvidia-smi
```

Finding which of your jobs to migrate to a MIG

You can find information on current and past jobs on the Narval usage portal, under the Job stats tab.

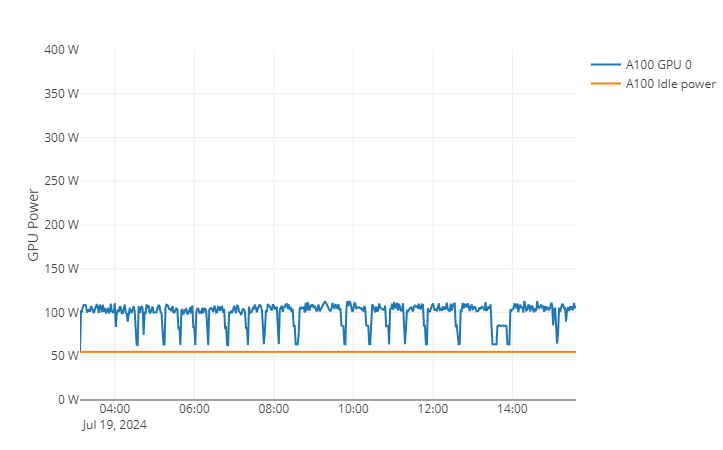

Power consumption is a good indicator of the total computing power requested from the GPU. For instance, the following job requested a full A100 GPU with a maximum TDP of 400 W, but used only 100 W on average, which is only 50 W more than the GPU's idle power consumption:
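To put a number on this, one option is to compare the job's average power above idle with the GPU's range between idle power and TDP. This "above-idle share" is a rough heuristic introduced here for illustration, not a metric reported by the usage portal, and `power_share_above_idle` is a hypothetical helper; integer arithmetic rounds the result down.

```shell
# Hypothetical helper: percentage of the GPU's above-idle power range that a
# job actually used. Arguments (integers, in watts): avg_power idle_power tdp
power_share_above_idle() {
    local avg=$1 idle=$2 tdp=$3
    echo $(( (avg - idle) * 100 / (tdp - idle) ))
}

# Example job described above: 100 W average, about 50 W idle, 400 W TDP.
power_share_above_idle 100 50 400
```

For the example job this gives 14, meaning the job used roughly a seventh of the power the GPU can draw above idle.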

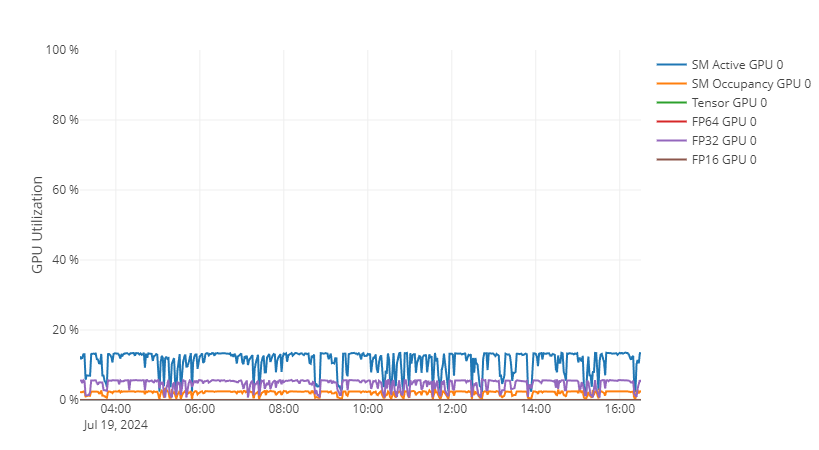

GPU utilization can also provide insight into how the GPU is used when power consumption alone is not conclusive. For this example job, the GPU utilization graph supports the conclusion drawn from the power consumption graph: the job uses less than 25% of the available computing power of a full A100 GPU:
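If you have a series of utilization samples, for example collected with nvidia-smi on the job's node, a short awk program can reduce them to an average to compare with the 25% figure. A sketch assuming one integer percentage per line; `mean_util` and the sample values are hypothetical.

```shell
# Hypothetical helper: read GPU utilization samples (integer percentages,
# one per line) on stdin and print their mean, rounded down.
mean_util() {
    awk '{ sum += $1; n++ }
         END { if (n > 0) printf "%d\n", sum / n; else print "no samples" }'
}

# Example with made-up samples.
printf '10\n30\n20\n' | mean_util
```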

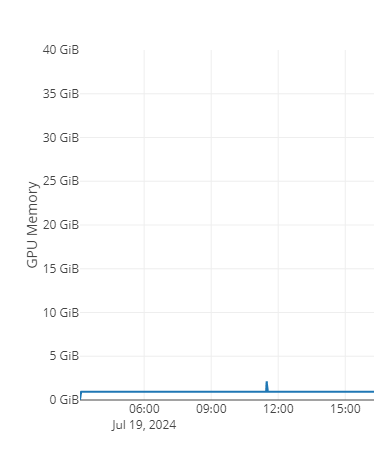

The final things to consider are the maximum amount of GPU memory and the average number of CPU cores required to run the job. For this example, the job uses a maximum of 3 GB of GPU memory out of the 40 GB of a full A100 GPU.

It was also launched using a single CPU core. Taking these three metrics into account, the job could easily run on a 3g.20gb or 4g.20gb GPU instance with power and memory to spare.
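The final check can be sketched as a comparison against the Narval limits given earlier on this page: 20 GB of GPU memory per 3g.20gb or 4g.20gb instance, and a recommended maximum of 6 cores and 62 GB of system memory. `fits_narval_mig` is a hypothetical helper, and the 8 GB of system memory in the example is an assumption, since the example job's memory request is not stated.

```shell
# Hypothetical helper: check whether a job's measured needs fit within one
# 3g.20gb or 4g.20gb instance on Narval (20 GB GPU memory, recommended
# maximum of 6 cores and 62 GB of system memory).
# Arguments (integers): peak_gpu_mem_gb cpu_cores system_mem_gb
fits_narval_mig() {
    local gpu_mem=$1 cores=$2 sys_mem=$3
    if [ "$gpu_mem" -le 20 ] && [ "$cores" -le 6 ] && [ "$sys_mem" -le 62 ]; then
        echo "fits"
    else
        echo "too-large"
    fi
}

# Example job above: 3 GB peak GPU memory, 1 CPU core,
# and an assumed 8 GB of system memory.
fits_narval_mig 3 1 8
```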

Another way to monitor the usage of a running job is to attach to the node where the job is running and use nvidia-smi to read the GPU metrics in real time. This does not provide the maximum and average memory and power values for the whole job, but it can be helpful for troubleshooting.
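To get a rough peak and average anyway, you can record nvidia-smi samples for a while and summarize them. The sketch below assumes CSV lines of the form `memory.used, power.draw` without header or units, as printed by `nvidia-smi --query-gpu=memory.used,power.draw --format=csv,noheader,nounits -l 10`, and assumes the output covers a single GPU. `summarize_gpu_samples` and the sample values are hypothetical.

```shell
# Hypothetical helper: summarize CSV samples of "memory.used, power.draw"
# (MiB, W), one GPU per line, and print the peak memory and the mean power.
summarize_gpu_samples() {
    awk -F', *' '
        BEGIN { max_mem = 0 }
        {
            mem = $1 + 0                      # force numeric comparison
            if (mem > max_mem) max_mem = mem
            power += $2; n++
        }
        END {
            if (n > 0) printf "peak_mem_mib=%d mean_power_w=%d\n", max_mem, power / n
        }'
}

# Example with made-up samples instead of live nvidia-smi output.
printf '2900, 95.5\n3100, 104.5\n' | summarize_gpu_samples
```

On a GPU node, you would pipe the nvidia-smi command above into the function and stop it with Ctrl+C; the summary then covers only the period you sampled, not the whole job.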