Allocations and compute scheduling: Difference between revisions


<languages />

<translate>

{{Draft}}

= What is an allocation? =

'''An allocation is an amount of resources that a research group can target for use over a period of time, usually a year.''' This amount is either a maximum, as is the case for storage, or an average amount of usage over the period, as is the case for shared resources like compute cores.

Allocations are usually made in terms of core years, GPU years, or storage space. Storage allocations are the most straightforward to understand: research groups get a maximum amount of storage that they can use exclusively throughout the allocation period. Core-year and GPU-year allocations are more difficult to understand because they are meant to capture average use throughout the allocation period (typically a year), and this use occurs across a set of resources shared with other research groups.

The time period of an allocation when it is granted is an ideal; the average is calculated over the actual period during which the resources were available. This means that if the allocation period was a year and the clusters were down for a week of maintenance, a research group would not be owed an additional week of resource usage. Conversely, if the allocation period were extended, the research groups affected would not owe or lose resource usage.
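As a rough illustration of the averaging described above, the sketch below compares a core-year allocation with usage averaged over the days the cluster was actually available. The function names are hypothetical and chosen only for this example; they are not part of any scheduler.

```python
HOURS_PER_DAY = 24


def average_cores_used(core_hours_used: float, available_days: float) -> float:
    """Average number of cores in use over the days the cluster was available."""
    if available_days <= 0:
        raise ValueError("available_days must be positive")
    return core_hours_used / (available_days * HOURS_PER_DAY)


def target_met(allocation_core_years: float,
               core_hours_used: float,
               available_days: float) -> bool:
    """An allocation of N core years is treated here as a target of N cores on average."""
    return average_cores_used(core_hours_used, available_days) >= allocation_core_years


# A 100 core-year allocation in a year with one week of downtime (358 available days):
# the target is still an average of 100 cores, but only over the 358 available days.
print(average_cores_used(859_200, 358))
```

Under these assumptions, the week of downtime lowers the total number of core hours needed to reach the target rather than extending the period.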

For core-year and GPU-year allocations, both of which target average resource usage over time on shared resources, a research group is more likely to hit (or exceed) its targets if it uses the resources evenly over the allocation period than if it uses them in bursts or puts off use until later in the period.

= How does scheduling work? =

Compute-related resources granted by core-year and GPU-year allocations require research groups to submit what are referred to as “jobs” to a “scheduler”. A job is a combination of a computer program (an application) and a list of resources that the application is expected to use. The [[What is a scheduler?|scheduler]] is a program that calculates the priority of each submitted job and provides the needed resources based on that priority and on the resources available.

The scheduler uses prioritization algorithms to meet the allocation targets of all groups. Priority is based on a research group’s recent usage of the system compared with its allocated usage on that system. Usage from earlier in the allocation period is taken into account, but the most weight is put on recent usage (or non-usage). The point of this is to allow a research group whose actual usage matches its allocated amount to operate roughly continuously at that level. This smooths resource usage over time across all groups and resources, making it theoretically possible for all research groups to hit their allocation targets.
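The idea of weighting recent usage more heavily than older usage can be sketched as follows. This is only an illustration: the exponential decay, the 7-day half-life, and the function names are assumptions made for the example, and the actual priority calculation is not described in this document.

```python
def decayed_usage(daily_usage, half_life_days=7.0):
    """Weighted sum of daily usage; the last entry is the most recent day (weight 1).

    Each older day counts for less: its weight halves every `half_life_days`.
    """
    newest = len(daily_usage) - 1
    return sum(
        usage * 0.5 ** ((newest - day) / half_life_days)
        for day, usage in enumerate(daily_usage)
    )


def usage_ratio(daily_usage, daily_target, half_life_days=7.0):
    """Decayed usage divided by the decayed target over the same days.

    A value below 1 means the group has recently used less than its target.
    """
    used = decayed_usage(daily_usage, half_life_days)
    target = decayed_usage([daily_target] * len(daily_usage), half_life_days)
    return used / target


# Two groups with the same target and the same total usage over 30 days:
early_burst = [20] * 15 + [0] * 15   # heavy use early, idle recently
late_burst = [0] * 15 + [20] * 15    # idle early, heavy use recently
print(usage_ratio(early_burst, 10), usage_ratio(late_burst, 10))
```

In this sketch the two groups end up with different ratios even though their totals are equal, because only the timing of their usage differs. A group that uses exactly its target every day gets a ratio of 1.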

= How does resource use affect priority? =

The overarching principle governing the calculation of priority on Compute Canada's new national clusters is that compute-based jobs are counted according to the resources that others are prevented from using, not the resources actually used.

The most common example of unused cores contributing to a priority calculation occurs when a submitted job requests multiple cores but uses fewer than requested when run. The usage that affects the priority of future jobs is the number of cores requested, not the number of cores the application actually used. This is because the unused cores were unavailable to others for the duration of the job.
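A minimal sketch of this accounting rule, assuming usage is counted in core hours over the elapsed time of the job (the function names and the choice of unit are assumptions made for the example):

```python
def charged_core_hours(cores_requested: int, elapsed_hours: float) -> float:
    """Usage counted against the group: based on cores requested, not cores kept busy."""
    return cores_requested * elapsed_hours


def core_efficiency(cores_requested: int, cores_used: float) -> float:
    """Fraction of the requested cores that the application actually used."""
    return cores_used / cores_requested


# A job requests 16 cores for 10 hours but only keeps 4 of them busy.
print(charged_core_hours(16, 10))   # counted as if all 16 cores were used
print(core_efficiency(16, 4))       # only a quarter of the request did useful work
```

Requesting only the cores an application can actually use keeps the counted usage close to the useful work done.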

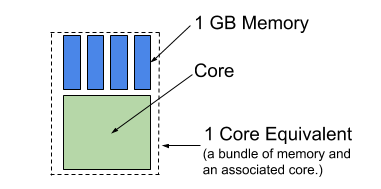

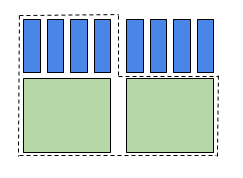

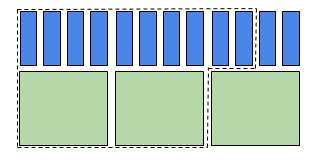

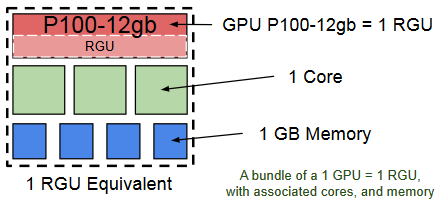

Another common case is when a job requests more memory than is associated with the cores it requests. If a cluster that has 4 GB of memory associated with each core receives a job request for a single core but 8 GB of memory, the job will be deemed to have used two cores. This is because other researchers were effectively prevented from using the second core: there was no memory available for it.
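The memory example above can be written as a small calculation. The sketch below assumes that a job is counted by whichever is larger, its requested cores or its requested memory expressed in per-core units, and that fractional results are allowed. The function name and the 4 GB default are taken from the example only and are not a statement about any particular cluster.

```python
def core_equivalents(cores_requested: float,
                     memory_gb_requested: float,
                     memory_gb_per_core: float = 4.0) -> float:
    """Cores a job is deemed to use, counting memory that blocks other cores."""
    if memory_gb_per_core <= 0:
        raise ValueError("memory_gb_per_core must be positive")
    cores_blocked_by_memory = memory_gb_requested / memory_gb_per_core
    return max(cores_requested, cores_blocked_by_memory)


print(core_equivalents(1, 8))   # the example from the text: 1 core, 8 GB
print(core_equivalents(4, 8))   # memory fits within the requested cores
```

With these assumptions, the single-core 8 GB job counts as two cores, while a four-core job requesting the same 8 GB counts only for its four cores.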

Understanding how resources are accounted for requires a sound understanding of the core equivalent concept, which is discussed below.<ref>Further details about how priority is calculated are beyond the scope of this document. Additional documentation is in preparation. We also suggest that a [https://www.westgrid.ca/events/scheduling_job_management_how_get_most_cluster training course] might be valuable for anyone wishing to know more.</ref>

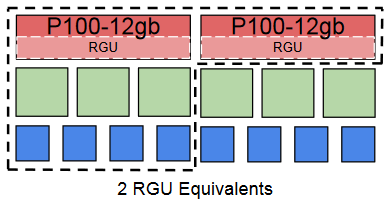

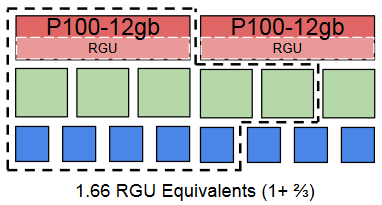

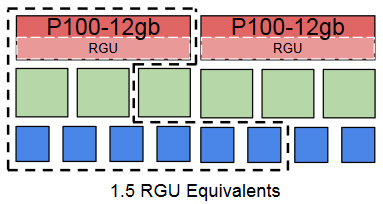

[[File:Core_equivalent_diagram_GP.png|frame|Figure 1 - Core equivalent diagram for Cedar, Graham, and Béluga.]]


[[File:GPU_and_a_half_(memory).png|frame|Figure 7 - 1.5 GPU equivalents, based on memory.]]

</translate>

Revision as of 18:18, 16 March 2018

This is not a complete article: This is a draft, a work in progress that is intended to be published into an article, which may or may not be ready for inclusion in the main wiki. It should not necessarily be considered factual or authoritative.
