VASP

- The Vienna ab initio Simulation Package (VASP) is a computer program for atomic scale materials modelling, e.g. electronic structure calculations and quantum mechanical molecular dynamics, from first principles.

- Reference: VASP website

Licensing

If you wish to use the prebuilt VASP binaries on Cedar and/or Graham, you must contact Technical support requesting access to VASP with the following information:

- Include license holder (your PI) information:

  - Name

  - Email address

  - Department and institution (university)

- Include license information:

  - Version of the VASP license (VASP version 4 or version 5)

  - License number

- Provide an updated list of who is allowed to use your VASP license. For example, forward to us the most recent email from the VASP license administrator that contains the list of licensed users.

If you are licensed for version 5 you may also use version 4, but a version 4 license does not permit you to use version 5.

Using prebuilt VASP

Prebuilt VASP binary files have been installed only on Cedar and Graham.

- Run module spider vasp to see which versions are available.

- Choose your version and run module spider vasp/<version> to see which dependencies you need to load for this particular version.

- Load the dependencies and the VASP module, for example:

module load intel/2020.1.217 intelmpi/2019.7.217 vasp/5.4.4

See Using modules for more information.

Pseudopotential files

All pseudopotentials have been downloaded from the official VASP website and untarred. They are all located in $EBROOTVASP/pseudopotentials/ on Cedar and Graham and can be accessed once the VASP module is loaded.
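
VASP reads a single POTCAR in which the per-element potentials are concatenated in the same order as the species in POSCAR. The sketch below shows one way to assemble it. The helper name make_potcar and the assumed layout <pp_dir>/<Element>/POTCAR are illustrative assumptions; the actual directory structure under $EBROOTVASP/pseudopotentials/ is not described here, so list it before relying on this.

```shell
#!/bin/bash
# Sketch: concatenate per-element POTCAR files into one POTCAR.
# ASSUMPTION: <pp_dir>/<Element>/POTCAR exists for each element; the real
# layout under $EBROOTVASP/pseudopotentials/ may differ, so list it first.
make_potcar() {
    local pp_dir=$1 out=$2
    shift 2
    local el
    # Check every element first so a partial POTCAR is never written.
    for el in "$@"; do
        if [ ! -f "$pp_dir/$el/POTCAR" ]; then
            echo "missing: $pp_dir/$el/POTCAR" >&2
            return 1
        fi
    done
    : > "$out"
    # Append in the order given, which must match the species order in POSCAR.
    for el in "$@"; do
        cat "$pp_dir/$el/POTCAR" >> "$out"
    done
}

# Hypothetical usage after loading the VASP module (<set> is a placeholder):
#   make_potcar "$EBROOTVASP/pseudopotentials/<set>" POTCAR Si O
```

Checking all elements before writing keeps a failed call from leaving a truncated POTCAR behind.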

Executable programs

For VASP-4.6, executable files are:

- vasp for standard NVT calculations with non gamma k points

- vasp-gamma for standard NVT calculations with only gamma points

- makeparam to estimate how much memory is required to run VASP for a particular cluster

For VASP-5.4.1, 5.4.4 and 6.1.0 (without CUDA support), executable files are:

- vasp_std for standard NVT calculations with non gamma k points

- vasp_gam for standard NVT calculations with only gamma points

- vasp_ncl for NPT calculations with non gamma k points

For VASP-5.4.4 and 6.1.0 (with CUDA support), executable files are:

- vasp_gpu for standard NVT calculations with gamma and non gamma k points

- vasp_gpu_ncl for NPT calculations with gamma and non gamma k points
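
The version-to-executable lists above can be captured in a small lookup, which is handy when one job script template serves several versions. This is a minimal sketch: vasp_exe and its kind keywords (std, gam, ncl, gpu, gpu_ncl) are hypothetical names chosen for illustration, and the table only encodes the versions listed in this section.

```shell
#!/bin/bash
# Sketch: map a VASP version and calculation kind to the executable names
# listed above. vasp_exe is a hypothetical helper, not part of VASP.
vasp_exe() {
    local version=$1 kind=$2
    case "$version:$kind" in
        4.6:std)                       echo vasp ;;
        4.6:gam)                       echo vasp-gamma ;;
        5.4.1:std|5.4.4:std|6.1.0:std) echo vasp_std ;;
        5.4.1:gam|5.4.4:gam|6.1.0:gam) echo vasp_gam ;;
        5.4.1:ncl|5.4.4:ncl|6.1.0:ncl) echo vasp_ncl ;;
        5.4.4:gpu|6.1.0:gpu)           echo vasp_gpu ;;
        5.4.4:gpu_ncl|6.1.0:gpu_ncl)   echo vasp_gpu_ncl ;;
        *)
            echo "no executable listed for VASP $version ($kind)" >&2
            return 1 ;;
    esac
}
```

For example, vasp_exe 5.4.4 gam prints vasp_gam, while an unlisted combination such as vasp_exe 4.6 gpu returns a non-zero status.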

Two extensions have also been incorporated: Transition State Tools and VASPsol.

If you need a version of VASP that does not appear here, you can either build it yourself (see below) or write to us and ask that it be built and installed.

Building VASP on Narval

If you are licensed to use VASP and have access to the VASP source code, you can install VASP in your home directory in the same way as it has been installed on Cedar and Graham, i.e. with the two extensions Transition State Tools and VASPsol, by running one of the following commands:

eb VASP-5.4.4-iimpi-2020a.eb for VASP-5.4.4

eb VASP-6.1.2-iimpi-2020a.eb for VASP-6.1.2

eb VASP-6.2.1-iimpi-2020a.eb for VASP-6.2.1

The above commands should be run from the directory where the VASP source files are located. The source files for VASP 5.4.4, 6.1.2 and 6.2.1 are vasp.5.4.4.pl2.tgz, vasp.6.1.2_patched.tgz and vasp.6.2.1.tgz respectively. Running those commands takes some time. Once the build is done, you will be able to load and run VASP using the module command exactly as explained in Using prebuilt VASP.

VASP-GPU

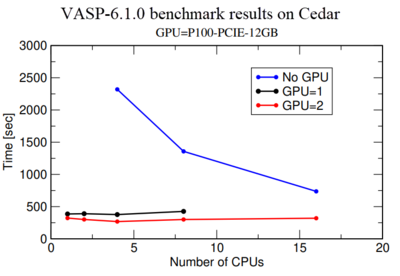

VASP-GPU executable files run on both the GPUs and the CPUs of a node. Because a calculation on a GPU is much more expensive than on a CPU, we strongly recommend running a benchmark with one or two GPUs to make sure you get the maximum performance from them. Fig. 1 shows a benchmark of a Si crystal containing 256 Si atoms in the simulation box. The blue, black and red lines show simulation time as a function of the number of CPUs for GPU=0, 1 and 2 respectively. With CPU=1, the performance for GPU=1 and GPU=2 is more than 5 times better than for GPU=0. However, comparing GPU=1 with GPU=2 shows little performance gain from the second GPU; in fact, GPU utilization with GPU=2 is only around 50% in our monitoring system. We therefore recommend that users first run a benchmark like this for their own system to make sure they are not wasting computing resources.
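
A benchmark comparison like the one described above comes down to reading the wall time of each run and dividing. The sketch below assumes each run's OUTCAR contains a line of the form "Elapsed time (sec): <number>"; check your own OUTCAR before relying on that pattern. The function names elapsed_sec and speedup are hypothetical.

```shell
#!/bin/bash
# Sketch: compare wall times of two benchmark runs.
# ASSUMPTION: each OUTCAR contains a line like "Elapsed time (sec):  123.4".
elapsed_sec() {
    # Print the last matching value; fail if the line is absent.
    awk '/Elapsed time \(sec\)/ {t = $NF} END {if (t == "") exit 1; print t}' "$1"
}

# speedup <reference_seconds> <new_seconds> prints the ratio with two decimals.
speedup() {
    awk -v ref="$1" -v new="$2" 'BEGIN {
        if (new <= 0) exit 1
        printf "%.2f\n", ref / new
    }'
}
```

With runs stored in hypothetical directories cpu_only/ and gpu1/, a call such as speedup "$(elapsed_sec cpu_only/OUTCAR)" "$(elapsed_sec gpu1/OUTCAR)" gives the speedup of the GPU run. A ratio close to 1 when going from one GPU to two suggests the second GPU is not worth requesting.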

Building VASP yourself

If you are licensed to use VASP you may download the source code from the VASP website and build custom versions. See Installing software in your home directory and Installing VASP.

Example of a VASP job script

The following is a job script to run VASP in parallel using the Slurm job scheduler:

#!/bin/bash

#SBATCH --account=<ACCOUNT>

#SBATCH --ntasks=4 # number of MPI processes

#SBATCH --mem-per-cpu=1024M # memory

#SBATCH --time=0-00:05 # time (DD-HH:MM)

module load intel/2020.1.217 intelmpi/2019.7.217 vasp/<VERSION>

srun <VASP>

- The above job script requests four CPU cores and 4096MB memory (4x1024MB).

- <ACCOUNT> is a Slurm account name; see Accounts and projects to know what to enter there.

- <VERSION> is the number for the VASP version you want to use: 4.6, 5.4.1, 5.4.4 or 6.1.0.

- Use module spider vasp/<VERSION> to see which dependencies you need to load for this particular version.

- <VASP> is the name of the executable. Refer to section Executable programs above for the executables you can select for each version.
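
The placeholders described above can be filled in programmatically. The following is a minimal sketch of a generator for the CPU job script. write_vasp_job is a hypothetical helper, the account name in the usage note is a placeholder, and the module versions are copied from the example, so they may need adjusting for other VASP versions.

```shell
#!/bin/bash
# Sketch: write a CPU job script like the one above from four parameters.
# write_vasp_job is a hypothetical helper; module versions are copied from
# the example and may differ for your VASP version (check module spider).
write_vasp_job() {
    local account=$1 version=$2 exe=$3 ntasks=$4
    local mem_per_cpu=1024   # MB per MPI process, as in the example
    cat > vasp_job.sh <<EOF
#!/bin/bash
#SBATCH --account=${account}
#SBATCH --ntasks=${ntasks}
#SBATCH --mem-per-cpu=${mem_per_cpu}M
#SBATCH --time=0-00:05
module load intel/2020.1.217 intelmpi/2019.7.217 vasp/${version}
srun ${exe}
EOF
    # Total memory requested = MPI processes x memory per process.
    echo "total memory: $((ntasks * mem_per_cpu))MB"
}
```

For instance, write_vasp_job def-someuser 5.4.4 vasp_std 4 writes vasp_job.sh in the current directory and reports a total of 4096MB, matching the 4x1024MB arithmetic above.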

The following is a job script to run VASP using one GPU:

#!/bin/bash

#SBATCH --account=<ACCOUNT>

#SBATCH --cpus-per-task=1 # number of CPU cores

#SBATCH --gres=gpu:p100:1 # one GPU of type p100 (this type is valid only on Cedar)

#SBATCH --mem=3GB # memory

#SBATCH --time=0-00:05 # time (DD-HH:MM)

module load intel/2020.1.217 cuda/11.0 openmpi/4.0.3 vasp/<VERSION>

srun <VASP>

- The above job script requests one CPU core and 3GB memory.

- The above job script requests one GPU type p100 which is only available on Cedar. For other clusters, please see the GPU types available.

- The above job uses srun to run VASP.

VASP uses four input files named INCAR, KPOINTS, POSCAR and POTCAR. It is best to prepare the VASP input files in a separate directory for each job. To submit the job from that directory, use:

sbatch vasp_job.sh
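
Because a job with a missing input file fails only after waiting in the queue, it can help to check the directory before submitting. The sketch below tests for the four input files named above; check_vasp_inputs is a hypothetical helper name.

```shell
#!/bin/bash
# Sketch: verify the four VASP input files exist and are non-empty.
check_vasp_inputs() {
    local dir=${1:-.} missing=0 f
    for f in INCAR KPOINTS POSCAR POTCAR; do
        if [ ! -s "$dir/$f" ]; then
            echo "missing or empty: $dir/$f" >&2
            missing=1
        fi
    done
    # Status 0 only when all four files are present.
    return "$missing"
}
```

A typical use would be check_vasp_inputs . && sbatch vasp_job.sh, so the job is submitted only when all four files are in place.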

If you do not know how much memory your job needs, prepare all your input files and then run makeparam in an interactive job. Use its result as the memory requirement for the next run. For a more accurate estimate for future jobs, check the maximum stack size used by completed jobs and use this as the memory requirement per processor for the next job.
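
Once a peak memory figure from a completed job is known, it has to be converted into a value for --mem-per-cpu. The sketch below does that conversion with a safety margin. It assumes the figure is a number with a K, M or G suffix, which is the form Slurm's sacct prints for its MaxRSS field; treating MaxRSS as the measurement referred to above is an assumption, and both mem_request_mb and its headroom percentage are hypothetical.

```shell
#!/bin/bash
# Sketch: turn a measured peak memory value into a --mem-per-cpu request.
# ASSUMPTION: the peak is a number with a K, M or G suffix (the form sacct
# prints for MaxRSS); headroom_pct is a hypothetical safety margin.
mem_request_mb() {
    local peak=$1 headroom_pct=${2:-20}
    awk -v p="$peak" -v h="$headroom_pct" 'BEGIN {
        unit = toupper(substr(p, length(p)))
        value = p + 0                      # numeric prefix of the string
        if (unit == "K")      mb = value / 1024
        else if (unit == "M") mb = value
        else if (unit == "G") mb = value * 1024
        else exit 1                        # unknown or missing suffix
        mb = mb * (1 + h / 100)
        rounded = int(mb)
        if (rounded < mb) rounded++        # round up to a whole MB
        printf "%dM\n", rounded
    }'
}
```

For example, a peak of 819200K with 25% headroom gives 1000M, which could then be used as #SBATCH --mem-per-cpu=1000M in the next job script.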

If you want to use 32 or more cores, please read about whole-node scheduling.